蒙版弹幕功能可以在弹幕经过画面中的主体区域(如人像)时,智能地将其隐藏,实现“弹幕绕人走”的效果,从而在保证弹幕互动性的同时,避免遮挡视频的核心内容,极大地提升用户观看体验。该功能尤其适用于人物访谈、舞蹈视频、游戏直播等需要突出画面主体的场景。效果演示:

本文将指导您如何基于应用中已有的弹幕渲染功能,实现播放器 SDK 提供的蒙版弹幕能力。

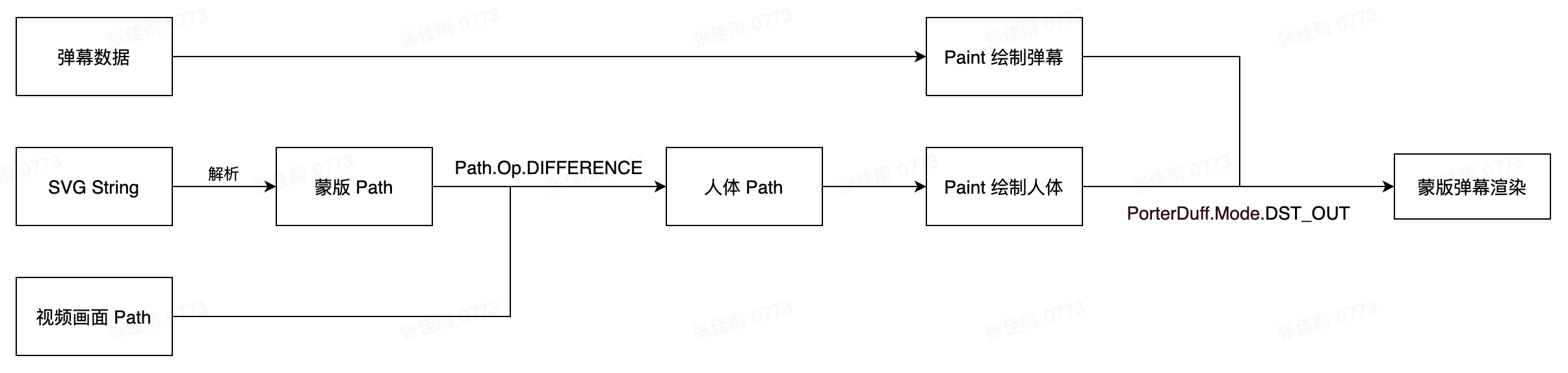

How it works

Mask danmaku is implemented jointly by the player SDK and your danmaku rendering module:

The SDK outputs mask information: based on the timestamp of the current video frame, the player SDK outputs, in real time, mask information (an SVG string) that describes the outline of the subject in the frame. Example SVG:

    <svg version="1.0" xmlns="http://www.w3.org/2000/svg" width="455px" height="256px" viewBox="0 0 455 256" preserveAspectRatio="xMidYMid meet">
      <g transform="translate(0,256) scale(0.1,-0.1)" fill="#000000">
        <path d="M0 1280 l0 -1280 694 0 695 0 19 53 c11 28 36 84 55 122 20 39 45 104 56 145 18 67 48 157 96 290 9 25 22 52 30 60 42 49 89 220 99 363 7 100 51 230 89 264 12 11 63 33 112 47 50 15 117 35 150 45 56 17 60 20 63 51 3 30 -10 73 -61 195 -11 28 -24 70 -27 95 -4 25 -13 66 -21 92 -10 38 -11 61 0 125 14 91 17 187 7 224 -6 18 -3 31 9 42 20 21 68 22 84 3 25 -30 66 -29 133 5 112 57 218 33 288 -65 19 -27 61 -78 92 -113 36 -40 60 -77 64 -98 7 -37 -8 -121 -31 -169 -8 -17 -21 -59 -29 -94 -8 -34 -23 -77 -35 -95 -68 -108 -71 -126 -23 -164 20 -16 69 -47 107 -68 39 -20 106 -61 150 -90 44 -29 99 -62 122 -74 23 -11 48 -30 56 -41 11 -16 21 -19 40 -15 38 10 91 -11 132 -53 46 -47 57 -73 44 -110 -22 -65 -123 -81 -154 -24 l-14 27 -1 -35 c0 -19 7 -67 15 -105 8 -39 15 -86 15 -105 1 -33 4 -36 65 -64 119 -53 150 -110 120 -218 -17 -63 -87 -220 -111 -248 -12 -15 -114 -190 -114 -196 0 -2 331 -4 735 -4 l735 0 0 1280 0 1280 -2275 0 -2275 0 0 -1280z"/>
      </g>
    </svg>

[Figure: rendering of the SVG above]
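To make the structure of the mask string concrete, the following is a minimal plain-Java sketch that pulls out the four pieces of an SVG like the one above that matter for rendering: the mask width and height, the `transform` of the `<g>` element, and the `d` attribute of the `<path>`. The class name `MaskSvgFields` is hypothetical and not part of the SDK. The sketch assumes the SVG has the same shape as the example (one `<g>`, one `<path>`, sizes in `px`).

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

/** Hypothetical helper (not an SDK class): the fields of a mask SVG that a renderer needs. */
final class MaskSvgFields {
    final int width;         // mask width in px, from <svg width="...">
    final int height;        // mask height in px, from <svg height="...">
    final String transform;  // raw transform attribute of the first <g>
    final String pathData;   // raw "d" attribute of the first <path>

    private MaskSvgFields(int width, int height, String transform, String pathData) {
        this.width = width;
        this.height = height;
        this.transform = transform;
        this.pathData = pathData;
    }

    /** Parses the SVG string with the JDK DOM parser and extracts the fields. */
    static MaskSvgFields parse(String svg) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(svg.getBytes(StandardCharsets.UTF_8)));
            Element root = doc.getDocumentElement();
            Element group = (Element) root.getElementsByTagName("g").item(0);
            Element path = (Element) root.getElementsByTagName("path").item(0);
            return new MaskSvgFields(
                    px(root.getAttribute("width")),
                    px(root.getAttribute("height")),
                    group == null ? "" : group.getAttribute("transform"),
                    path == null ? "" : path.getAttribute("d"));
        } catch (Exception e) {
            throw new IllegalArgumentException("not a well-formed mask SVG", e);
        }
    }

    /** Converts a size such as "455px" to 455. */
    private static int px(String value) {
        return Integer.parseInt(value.replace("px", "").trim());
    }
}
```

For the example above, `parse` would yield a 455 x 256 mask whose path coordinates are ten times larger than the mask and flipped vertically, which is what `scale(0.1,-0.1)` followed by `translate(0,256)` undoes.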

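The danmaku layer parses and draws: after receiving the SVG string, your danmaku rendering module needs to:

- Parse the SVG: parse the path information (<path d=...>) into an Android Path object. This Path represents the outline of the subject (for example, a person) in the frame.
- Draw danmaku normally: draw your danmaku content on the canvas as usual.
- Apply the mask: using a Paint in PorterDuff.Mode.DST_OUT mode, draw the subject Path parsed earlier on top of the danmaku. The danmaku is thereby "cut out" inside the subject outline.

[Figure: danmaku clipped along the subject outline]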
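The effect of DST_OUT in the last step can be illustrated per pixel. Under Porter-Duff DST_OUT, the destination (the danmaku already drawn) keeps its color but its alpha is multiplied by one minus the source alpha (the mask). The plain-Java sketch below works on non-premultiplied ARGB integers, with a boolean array standing in for "this pixel lies inside the subject Path". The names are illustrative and the code is a model of the blend, not of Android's renderer.

```java
/** Illustrative per-pixel model of Porter-Duff DST_OUT (not Android code). */
final class DstOutSketch {

    /**
     * Blends one pixel: the result keeps the destination color and has
     * alpha = dstAlpha * (1 - srcAlpha). Pixels are non-premultiplied ARGB.
     */
    static int dstOut(int dst, int src) {
        int dstAlpha = dst >>> 24;
        int srcAlpha = src >>> 24;
        int outAlpha = dstAlpha * (255 - srcAlpha) / 255;
        return (outAlpha << 24) | (dst & 0x00FFFFFF);
    }

    /**
     * Applies an opaque mask to a row of danmaku pixels: pixels inside the
     * subject become fully transparent, the others are left unchanged.
     */
    static int[] applyMask(int[] danmaku, boolean[] insideSubject) {
        int[] out = new int[danmaku.length];
        for (int i = 0; i < danmaku.length; i++) {
            int src = insideSubject[i] ? 0xFFFFFFFF : 0x00000000;
            out[i] = dstOut(danmaku[i], src);
        }
        return out;
    }
}
```

Because only the source alpha matters, the color of the mask Paint is irrelevant as long as it is opaque.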

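Prerequisites

- Danmaku rendering is implemented: this document assumes that your app already has its own danmaku rendering system (that is, you have a View that displays danmaku).
- Server-side configuration is complete:
  - Generate the mask file: use the VOD media processing service to generate a mask danmaku file for the video.
  - Issue the PlayAuthToken correctly (Vid mode only): if you play by Vid, your application server must additionally sign the field "NeedBarrageMask": true into the PlayAuthToken it issues to the client.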

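Implementation steps

Step 1: Add the project dependency

In the dependencies block of your app module's build.gradle file, add the dependency on the open-source danmaku library (DanmakuFlameMaster):

    // app/build.gradle
    dependencies {
        // ... other dependencies
        implementation group: 'com.github.ctiao', name: 'dfm', version: '0.9.25'
    }

Classes such as DrawHandler and IRenderer come from this library.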

Step 2: Create the XML layout

To produce the mask effect, stack the video rendering layer and the danmaku mask layer in the layout. A FrameLayout is the usual choice.

- SurfaceView (or TextureView) plays the video.
- CoverView (created in the next step) is a custom View that floats above the video, draws danmaku, and applies the mask.

    <!-- activity_main.xml -->
    <FrameLayout
        android:layout_width="match_parent"
        android:layout_height="wrap_content">

        <!-- View that plays the video -->
        <SurfaceView
            android:id="@+id/surfaceView"
            android:layout_width="match_parent"
            android:layout_height="294dp"
            android:layout_gravity="center" />

        <!-- Custom View that draws danmaku and the mask -->
        <com.bytedance.volc.voddemo.CoverView
            android:id="@+id/coverView"
            android:layout_width="match_parent"
            android:layout_height="294dp"
            android:layout_gravity="center" />
    </FrameLayout>

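Step 3: Implement the danmaku CoverView

Create a custom View named CoverView.java. It receives the mask Path data and, in onDraw, draws danmaku and blends in the mask. The complete sample code of CoverView follows. Note that the onDraw in the sample draws only the mask paths and contains no real danmaku drawing logic. Add your own danmaku drawing before the mask is applied.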

    package com.bytedance.volc.voddemo;

    import android.annotation.SuppressLint;
    import android.content.Context;
    import android.graphics.Canvas;
    import android.graphics.Color;
    import android.graphics.Paint;
    import android.graphics.Path;
    import android.graphics.PorterDuff;
    import android.graphics.PorterDuffXfermode;
    import android.os.Build;
    import android.os.Handler;
    import android.os.HandlerThread;
    import android.os.Looper;
    import android.util.AttributeSet;
    import android.view.MotionEvent;
    import android.view.View;

    import java.util.ArrayList;
    import java.util.LinkedList;
    import java.util.List;

    import master.flame.danmaku.controller.DrawHandler;
    import master.flame.danmaku.controller.DrawHelper;
    import master.flame.danmaku.controller.IDanmakuView;
    import master.flame.danmaku.controller.IDanmakuViewController;
    import master.flame.danmaku.danmaku.model.BaseDanmaku;
    import master.flame.danmaku.danmaku.model.IDanmakus;
    import master.flame.danmaku.danmaku.model.android.DanmakuContext;
    import master.flame.danmaku.danmaku.parser.BaseDanmakuParser;
    import master.flame.danmaku.danmaku.renderer.IRenderer;
    import master.flame.danmaku.danmaku.util.SystemClock;
    import master.flame.danmaku.ui.widget.DanmakuTouchHelper;

    public class CoverView extends View implements IDanmakuView, IDanmakuViewController {

        public static final String TAG = "DanmakuView";

        private static final int MAX_RECORD_SIZE = 50;
        private static final int ONE_SECOND = 1000;

        private DrawHandler.Callback mCallback;
        private HandlerThread mHandlerThread;
        protected volatile DrawHandler handler;
        private boolean isSurfaceCreated;
        private boolean mEnableDanmakuDrawingCache = true;
        private OnDanmakuClickListener mOnDanmakuClickListener;
        private float mXOff;
        private float mYOff;
        private OnClickListener mOnClickListener;
        private DanmakuTouchHelper mTouchHelper;
        private boolean mShowFps;
        private boolean mDanmakuVisible = true;
        protected int mDrawingThreadType = THREAD_TYPE_NORMAL_PRIORITY;
        private Object mDrawMonitor = new Object();
        private boolean mDrawFinished = false;
        protected boolean mRequestRender = false;
        private long mUiThreadId;
        private int mWidth;
        private int mHeight;
        private LinkedList<Long> mDrawTimes;
        protected boolean mClearFlag;
        private int mResumeTryCount = 0;

        // Mask paths (subject outlines) to erase from the danmaku layer.
        private List<Path> mMasks = new ArrayList<>();
        // Paint used to draw the mask; configured with DST_OUT in init().
        private final Paint mMaskPaint = new Paint();

        public CoverView(Context context) {
            super(context);
            init();
        }

        public CoverView(Context context, AttributeSet attrs) {
            super(context, attrs);
            init();
        }

        public CoverView(Context context, AttributeSet attrs, int defStyle) {
            super(context, attrs, defStyle);
            init();
        }

        private void init() {
            mUiThreadId = Thread.currentThread().getId();
            setBackgroundColor(Color.TRANSPARENT);
            setDrawingCacheBackgroundColor(Color.TRANSPARENT);
            DrawHelper.useDrawColorToClearCanvas(true, false);
            mTouchHelper = DanmakuTouchHelper.instance(this);

            mMaskPaint.setColor(Color.WHITE);
            mMaskPaint.setStyle(Paint.Style.FILL);
            mMaskPaint.setXfermode(new PorterDuffXfermode(PorterDuff.Mode.DST_OUT));
        }

        public void addDanmaku(BaseDanmaku item) {
            if (handler != null) {
                handler.addDanmaku(item);
            }
        }

        @Override
        public void invalidateDanmaku(BaseDanmaku item, boolean remeasure) {
            if (handler != null) {
                handler.invalidateDanmaku(item, remeasure);
            }
        }

        @Override
        public void removeAllDanmakus(boolean isClearDanmakusOnScreen) {
            if (handler != null) {
                handler.removeAllDanmakus(isClearDanmakusOnScreen);
            }
        }

        @Override
        public void removeAllLiveDanmakus() {
            if (handler != null) {
                handler.removeAllLiveDanmakus();
            }
        }

        @Override
        public IDanmakus getCurrentVisibleDanmakus() {
            if (handler != null) {
                return handler.getCurrentVisibleDanmakus();
            }
            return null;
        }

        public void setCallback(DrawHandler.Callback callback) {
            mCallback = callback;
            if (handler != null) {
                handler.setCallback(callback);
            }
        }

        @Override
        public void release() {
            stop();
            if (mDrawTimes != null) mDrawTimes.clear();
        }

        @Override
        public void stop() {
            stopDraw();
        }

        /** Replaces the current mask paths and triggers a redraw. */
        public void updateMasks(List<Path> masks) {
            mMasks.clear();
            mMasks.addAll(masks);
            invalidate();
        }

        private synchronized void stopDraw() {
            if (this.handler == null) {
                return;
            }
            final DrawHandler h = this.handler;
            final HandlerThread handlerThread = this.mHandlerThread;
            this.mHandlerThread = null;
            this.handler = null;
            unlockCanvasAndPost();
            if (h != null) {
                h.quit();
                h.post(new Runnable() {
                    @Override
                    public void run() {
                        h.removeCallbacksAndMessages(null);
                        if (handlerThread != null) {
                            handlerThread.quit();
                        }
                    }
                });
            }
        }

        protected synchronized Looper getLooper(int type) {
            if (mHandlerThread != null) {
                mHandlerThread.quit();
                mHandlerThread = null;
            }
            int priority;
            switch (type) {
                case THREAD_TYPE_MAIN_THREAD:
                    return Looper.getMainLooper();
                case THREAD_TYPE_HIGH_PRIORITY:
                    priority = android.os.Process.THREAD_PRIORITY_URGENT_DISPLAY;
                    break;
                case THREAD_TYPE_LOW_PRIORITY:
                    priority = android.os.Process.THREAD_PRIORITY_LOWEST;
                    break;
                case THREAD_TYPE_NORMAL_PRIORITY:
                default:
                    priority = android.os.Process.THREAD_PRIORITY_DEFAULT;
                    break;
            }
            String threadName = "DFM Handler Thread #" + priority;
            mHandlerThread = new HandlerThread(threadName, priority);
            mHandlerThread.start();
            return mHandlerThread.getLooper();
        }

        private void prepare() {
            if (handler == null) {
                handler = new DrawHandler(getLooper(mDrawingThreadType), this, mDanmakuVisible);
            }
        }

        @Override
        public void prepare(BaseDanmakuParser parser, DanmakuContext config) {
            prepare();
            handler.setConfig(config);
            handler.setParser(parser);
            handler.setCallback(mCallback);
            handler.prepare();
        }

        @Override
        public boolean isPrepared() {
            return handler != null && handler.isPrepared();
        }

        @Override
        public DanmakuContext getConfig() {
            if (handler == null) {
                return null;
            }
            return handler.getConfig();
        }

        @Override
        public void showFPS(boolean show) {
            mShowFps = show;
        }

        private float fps() {
            if (mDrawTimes == null) {
                mDrawTimes = new LinkedList<>();
            }
            long lastTime = SystemClock.uptimeMillis();
            mDrawTimes.addLast(lastTime);
            Long first = mDrawTimes.peekFirst();
            if (first == null) {
                return 0.0f;
            }
            float dtime = lastTime - first;
            int frames = mDrawTimes.size();
            if (frames > MAX_RECORD_SIZE) {
                mDrawTimes.removeFirst();
            }
            return dtime > 0 ? mDrawTimes.size() * ONE_SECOND / dtime : 0.0f;
        }

        @Override
        public long drawDanmakus() {
            if (!isSurfaceCreated) {
                return 0;
            }
            if (!isShown()) {
                return -1;
            }
            long stime = SystemClock.uptimeMillis();
            lockCanvas();
            return SystemClock.uptimeMillis() - stime;
        }

        @SuppressLint("NewApi")
        private void postInvalidateCompat() {
            mRequestRender = true;
            if (Build.VERSION.SDK_INT >= 16) {
                this.postInvalidateOnAnimation();
            } else {
                this.postInvalidate();
            }
        }

        protected void lockCanvas() {
            if (!mDanmakuVisible) {
                return;
            }
            postInvalidateCompat();
            synchronized (mDrawMonitor) {
                while ((!mDrawFinished) && (handler != null)) {
                    try {
                        mDrawMonitor.wait(200);
                    } catch (InterruptedException e) {
                        if (!mDanmakuVisible || handler == null || handler.isStop()) {
                            break;
                        } else {
                            Thread.currentThread().interrupt();
                        }
                    }
                }
                mDrawFinished = false;
            }
        }

        private void lockCanvasAndClear() {
            mClearFlag = true;
            lockCanvas();
        }

        private void unlockCanvasAndPost() {
            synchronized (mDrawMonitor) {
                mDrawFinished = true;
                mDrawMonitor.notifyAll();
            }
        }

        @Override
        protected void onDraw(Canvas canvas) {
            super.onDraw(canvas);
            if ((!mDanmakuVisible) && (!mRequestRender)) {
                return;
            }

            // Draw your own danmaku content here, before the mask is applied.

            // Apply the mask: DST_OUT erases what has already been drawn inside each mask Path.
            // You need to fill mMasks with the mask Paths yourself (see updateMasks).
            for (final Path maskPath : mMasks) {
                canvas.drawPath(maskPath, mMaskPaint);
            }
            mRequestRender = false;
            unlockCanvasAndPost();
        }

        @Override
        protected void onLayout(boolean changed, int left, int top, int right, int bottom) {
            super.onLayout(changed, left, top, right, bottom);
            if (handler != null) {
                mWidth = right - left;
                mHeight = bottom - top;
                handler.notifyDispSizeChanged(this.mWidth, this.mHeight);
            }
            isSurfaceCreated = true;
        }

        public void toggle() {
            if (isSurfaceCreated) {
                if (handler == null) {
                    start();
                } else if (handler.isStop()) {
                    resume();
                } else {
                    pause();
                }
            }
        }

        @Override
        public void pause() {
            if (handler != null) {
                handler.removeCallbacks(mResumeRunnable);
                handler.pause();
            }
        }

        private Runnable mResumeRunnable = new Runnable() {
            @Override
            public void run() {
                DrawHandler drawHandler = handler;
                if (drawHandler == null) {
                    return;
                }
                mResumeTryCount++;
                if (mResumeTryCount > 4 || CoverView.super.isShown()) {
                    drawHandler.resume();
                } else {
                    drawHandler.postDelayed(this, 100 * mResumeTryCount);
                }
            }
        };

        @Override
        public void resume() {
            if (handler != null && handler.isPrepared()) {
                mResumeTryCount = 0;
                handler.post(mResumeRunnable);
            } else if (handler == null) {
                restart();
            }
        }

        @Override
        public boolean isPaused() {
            if (handler != null) {
                return handler.isStop();
            }
            return false;
        }

        public void restart() {
            stop();
            start();
        }

        @Override
        public void start() {
            start(0);
        }

        @Override
        public void start(long position) {
            Handler handler = this.handler;
            if (handler == null) {
                prepare();
                handler = this.handler;
            } else {
                handler.removeCallbacksAndMessages(null);
            }
            if (handler != null) {
                handler.obtainMessage(DrawHandler.START, position).sendToTarget();
            }
        }

        @Override
        public boolean onTouchEvent(MotionEvent event) {
            boolean isEventConsumed = mTouchHelper.onTouchEvent(event);
            if (!isEventConsumed) {
                return super.onTouchEvent(event);
            }
            return isEventConsumed;
        }

        public void seekTo(Long ms) {
            if (handler != null) {
                handler.seekTo(ms);
            }
        }

        public void enableDanmakuDrawingCache(boolean enable) {
            mEnableDanmakuDrawingCache = enable;
        }

        @Override
        public boolean isDanmakuDrawingCacheEnabled() {
            return mEnableDanmakuDrawingCache;
        }

        @Override
        public boolean isViewReady() {
            return isSurfaceCreated;
        }

        @Override
        public int getViewWidth() {
            return super.getWidth();
        }

        @Override
        public int getViewHeight() {
            return super.getHeight();
        }

        @Override
        public View getView() {
            return this;
        }

        @Override
        public void show() {
            showAndResumeDrawTask(null);
        }

        @Override
        public void showAndResumeDrawTask(Long position) {
            mDanmakuVisible = true;
            mClearFlag = false;
            if (handler == null) {
                return;
            }
            handler.showDanmakus(position);
        }

        @Override
        public void hide() {
            mDanmakuVisible = false;
            if (handler == null) {
                return;
            }
            handler.hideDanmakus(false);
        }

        @Override
        public long hideAndPauseDrawTask() {
            mDanmakuVisible = false;
            if (handler == null) {
                return 0;
            }
            return handler.hideDanmakus(true);
        }

        @Override
        public void clear() {
            if (!isViewReady()) {
                return;
            }
            if (!mDanmakuVisible || Thread.currentThread().getId() == mUiThreadId) {
                mClearFlag = true;
                postInvalidateCompat();
            } else {
                lockCanvasAndClear();
            }
        }

        @Override
        public boolean isShown() {
            return mDanmakuVisible && super.isShown();
        }

        @Override
        public void setDrawingThreadType(int type) {
            mDrawingThreadType = type;
        }

        @Override
        public long getCurrentTime() {
            if (handler != null) {
                return handler.getCurrentTime();
            }
            return 0;
        }

        @Override
        @SuppressLint("NewApi")
        public boolean isHardwareAccelerated() {
            // Hardware acceleration is available on Android 3.0 (API 11) and later.
            if (Build.VERSION.SDK_INT >= 11) {
                return super.isHardwareAccelerated();
            } else {
                return false;
            }
        }

        @Override
        public void clearDanmakusOnScreen() {
            if (handler != null) {
                handler.clearDanmakusOnScreen();
            }
        }

        @Override
        public void setOnDanmakuClickListener(OnDanmakuClickListener listener) {
            mOnDanmakuClickListener = listener;
        }

        @Override
        public void setOnDanmakuClickListener(OnDanmakuClickListener listener, float xOff, float yOff) {
            mOnDanmakuClickListener = listener;
            mXOff = xOff;
            mYOff = yOff;
        }

        @Override
        public OnDanmakuClickListener getOnDanmakuClickListener() {
            return mOnDanmakuClickListener;
        }

        @Override
        public float getXOff() {
            return mXOff;
        }

        @Override
        public float getYOff() {
            return mYOff;
        }

        public void forceRender() {
            mRequestRender = true;
            if (handler != null) {
                handler.forceRender();
            }
        }
    }
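This document does not state which thread delivers the mask callback. If it can arrive on a thread other than the UI thread (an assumption), clearing and refilling a shared list in updateMasks while onDraw iterates over it would be a data race. One common way to avoid that is to publish an immutable snapshot through a volatile field. The plain-Java sketch below shows the idea; the class name `MaskSnapshotHolder` is hypothetical and the type parameter stands in for android.graphics.Path.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical holder: writers publish an immutable copy, readers take a snapshot. */
final class MaskSnapshotHolder<T> {
    // volatile makes a newly published list visible to the reading thread.
    private volatile List<T> current = Collections.emptyList();

    /** Called by the producer (for example, the mask callback). */
    void update(List<T> masks) {
        // Copy first so later changes to the caller's list cannot leak in.
        current = Collections.unmodifiableList(new ArrayList<>(masks));
    }

    /** Called by the consumer (for example, the draw pass). Never null. */
    List<T> snapshot() {
        return current;
    }
}
```

In a View, such a holder would typically be paired with postInvalidate() instead of invalidate(), because invalidate() must be called on the UI thread.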

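Step 4: Configure the mask feature in the Activity

In your Activity or Fragment, initialize the player and complete all mask-related configuration.

Note

The following code depends on the getTextureRealRectF method and the DanmakuMaskParseUtil utility class from the "Reference" section. Add them to your project first.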

    // In the Activity's onCreate or the Fragment's onViewCreated method

    // 1. Get the View instance
    CoverView coverView = findViewById(R.id.coverView);

    // ... initialize the TTVideoEngine instance ...

    // 2. Initialize the mask module
    // This option initializes the internal thread and resources that the mask feature needs.
    // It must be set before playback.
    ttVideoEngine.setIntOption(TTVideoEngine.PLAYER_OPTION_ENABLE_OPEN_MASK_THREAD, 1);

    // 3. Set the mask callback
    // This is the bridge between the SDK and your danmaku View.
    ttVideoEngine.setMaskInfoListener(new MaskInfoListener() {
        @Override
        public void onMaskInfoCallback(int code, int pts, String svgInfo) {
            if (code != 0 || svgInfo == null || svgInfo.isEmpty()) {
                return;
            }

            // a. Get the current size of the video and of the container
            int videoWidth = ttVideoEngine.getVideoWidth();
            int videoHeight = ttVideoEngine.getVideoHeight();
            int containerWidth = coverView.getWidth();
            int containerHeight = coverView.getHeight();

            // b. Compute the area the video frame actually occupies inside the container
            RectF videoRealRect = getTextureRealRectF(IMAGE_LAYOUT_ASPECT_FIT,
                    videoWidth, videoHeight, containerWidth, containerHeight);

            // c. Parse the SVG data into a Path that fits the screen
            DisplayMetrics dm = getResources().getDisplayMetrics();
            Path path = DanmakuMaskParseUtil.parseSVG(svgInfo, videoRealRect,
                    dm.widthPixels, dm.heightPixels);

            // d. Pass the Path to CoverView to trigger a redraw
            List<Path> list = new ArrayList<>();
            list.add(path);
            coverView.updateMasks(list);
        }
    });

    // 4. Set the initial state of the mask feature
    // 1 enables the mask by default, so callbacks arrive as soon as playback starts.
    // You can also set 0 here and enable the mask later during playback, for example from a UI button.
    ttVideoEngine.setIntOption(TTVideoEngine.PLAYER_OPTION_ENABLE_OPEN_BARRAGE_MASK, 1);

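Step 5: Set the video source and the mask source

Set the video source and the mask source according to how you play the video.

- For a Vid source, the SDK associates the mask information automatically. You do not need to set a mask URL.
- For a URL source, in addition to setting the video source (StrategySource), you also need to call setBarrageMaskUrl to set the address of the mask file.

    // engine is your TTVideoEngine instance.
    DirectUrlSource directUrlSource = new DirectUrlSource.Builder()
            .setVid(vid)
            .addItem(new DirectUrlSource.UrlItem.Builder()
                    .setUrl(url)
                    .setCacheKey(cacheKey)
                    .build())
            .build();
    engine.setStrategySource(directUrlSource);

    // Key step: set the URL of the mask resource file
    engine.setBarrageMaskUrl(barrageMaskUrl);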

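Reference

Add the following helper function and utility class to your project.

getTextureRealRectF: computes the rectangle in which the video frame is actually displayed.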

    public static RectF getTextureRealRectF(int displayMode,
                                            int videoWidth, int videoHeight,
                                            int containerWidth, int containerHeight) {
        final float videoRatio = videoWidth / (float) videoHeight;
        final float containerRatio = containerWidth / (float) containerHeight;
        final int displayWidth;
        final int displayHeight;
        switch (displayMode) {
            case IMAGE_LAYOUT_TO_FILL:
                displayWidth = containerWidth;
                displayHeight = containerHeight;
                break;
            case IMAGE_LAYOUT_ASPECT_FILL_X:
                displayWidth = containerWidth;
                displayHeight = (int) (containerWidth / videoRatio);
                break;
            case IMAGE_LAYOUT_ASPECT_FILL_Y:
                displayWidth = (int) (containerHeight * videoRatio);
                displayHeight = containerHeight;
                break;
            case IMAGE_LAYOUT_ASPECT_FIT:
                if (videoRatio >= containerRatio) {
                    displayWidth = containerWidth;
                    displayHeight = (int) (containerWidth / videoRatio);
                } else {
                    displayWidth = (int) (containerHeight * videoRatio);
                    displayHeight = containerHeight;
                }
                break;
            case IMAGE_LAYOUT_ASPECT_FILL:
                if (videoRatio >= containerRatio) {
                    displayWidth = (int) (containerHeight * videoRatio);
                    displayHeight = containerHeight;
                } else {
                    displayWidth = containerWidth;
                    displayHeight = (int) (containerWidth / videoRatio);
                }
                break;
            default:
                throw new IllegalArgumentException("unknown displayMode = " + displayMode);
        }
        int left = (containerWidth - displayWidth) / 2;
        int top = (containerHeight - displayHeight) / 2;
        return new RectF(left, top, left + displayWidth, top + displayHeight);
    }
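As a worked example of the aspect-fit branch, the plain-Java sketch below repeats the same arithmetic without Android types and returns the rectangle as {left, top, right, bottom}. The class name and the sample sizes are illustrative only.

```java
/** Illustrative re-statement of the IMAGE_LAYOUT_ASPECT_FIT branch without Android types. */
final class AspectFitSketch {

    /** Returns {left, top, right, bottom} of the video centered inside the container. */
    static int[] aspectFit(int videoWidth, int videoHeight,
                           int containerWidth, int containerHeight) {
        float videoRatio = videoWidth / (float) videoHeight;
        float containerRatio = containerWidth / (float) containerHeight;
        int displayWidth;
        int displayHeight;
        if (videoRatio >= containerRatio) {
            // Video is relatively wider: fill the width, letterbox top and bottom.
            displayWidth = containerWidth;
            displayHeight = (int) (containerWidth / videoRatio);
        } else {
            // Video is relatively taller: fill the height, pillarbox left and right.
            displayWidth = (int) (containerHeight * videoRatio);
            displayHeight = containerHeight;
        }
        int left = (containerWidth - displayWidth) / 2;
        int top = (containerHeight - displayHeight) / 2;
        return new int[] {left, top, left + displayWidth, top + displayHeight};
    }
}
```

For a 1920 x 1080 video in a 1080 x 882 container, the video fills the width, is 607 px tall, and starts 137 px from the top, giving {0, 137, 1080, 744}. Mask paths must be offset by this left and top, otherwise the mask drifts away from the subject whenever the video is letterboxed.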

DanmakuMaskParseUtil.java: a utility class that parses the SVG. It converts the SVG data into a mask Path that fits the video frame.

    import android.graphics.Matrix;
    import android.graphics.Path;
    import android.graphics.RectF;
    import android.os.Build;
    import android.text.TextUtils;

    // Assumption: PathParser is taken from androidx.core.
    import androidx.core.graphics.PathParser;

    import org.w3c.dom.Document;
    import org.w3c.dom.Node;
    import org.w3c.dom.NodeList;

    import java.io.ByteArrayInputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.nio.charset.StandardCharsets;
    import java.util.ArrayList;
    import java.util.List;
    import java.util.regex.Matcher;
    import java.util.regex.Pattern;

    import javax.xml.parsers.DocumentBuilderFactory;
    import javax.xml.xpath.XPath;
    import javax.xml.xpath.XPathConstants;
    import javax.xml.xpath.XPathExpression;
    import javax.xml.xpath.XPathFactory;

    public class DanmakuMaskParseUtil {

        private static final String NODE_PATH_START = "d";
        private static final String NODE_WIDTH = "width";
        private static final String NODE_HEIGHT = "height";
        private static final String TRANSFORM = "transform";
        private static final String SCALE = "scale";
        private static final String TRANSLATE = "translate";
        private static final Matrix mMatrix = new Matrix();

        // BarrageMask Step 5
        public static Path parseSVG(String svgData, RectF videoRectF, int screenWidth, int screenHeight) {
            if (TextUtils.isEmpty(svgData) || videoRectF == null) {
                return new Path();
            }
            Path fullPath = new Path();
            float videoViewWidth = videoRectF.width();
            float videoViewHeight = videoRectF.height();
            float videoLeftPosition = videoRectF.left;
            float videoTopPosition = videoRectF.top;
            final String targetSvgData = getSuitSvgStr(svgData);
            InputStream inputStream = new ByteArrayInputStream(targetSvgData.getBytes(StandardCharsets.UTF_8));
            try {
                final Document document = DocumentBuilderFactory.newInstance()
                        .newDocumentBuilder()
                        .parse(inputStream);
                // Select every attribute in the document, then pick the ones we need by name.
                final String xpathExpression = "//@*";
                final XPath xPath = XPathFactory.newInstance().newXPath();
                final XPathExpression expression = xPath.compile(xpathExpression);
                final NodeList svgPaths = (NodeList) expression.evaluate(document, XPathConstants.NODESET);
                int maskWidth = 1;
                int maskHeight = 1;
                String transform = "";
                List<Path> pathList = new ArrayList<>();
                // parse get mask path
                for (int i = 0; i < svgPaths.getLength(); i++) {
                    final Node node = svgPaths.item(i);
                    switch (node.getNodeName()) {
                        case NODE_PATH_START:
                            final Path path = PathParser.createPathFromPathData(node.getTextContent());
                            pathList.add(path);
                            break;
                        case NODE_WIDTH:
                            maskWidth = Integer.parseInt(node.getTextContent().replace("px", ""));
                            break;
                        case NODE_HEIGHT:
                            maskHeight = Integer.parseInt(node.getTextContent().replace("px", ""));
                            break;
                        case TRANSFORM:
                            transform = node.getTextContent();
                            break;
                    }
                }

                float svgTranslateX = 0;
                float svgTranslateY = 0;
                float svgScaleX = 1;
                float svgScaleY = 1;
                if (!TextUtils.isEmpty(transform)) {
                    Point translate = getTransform(transform, TRANSLATE);
                    if (translate != null) {
                        svgTranslateX = translate.x;
                        svgTranslateY = translate.y;
                    }
                    Point scale = getTransform(transform, SCALE);
                    if (scale != null) {
                        svgScaleX = scale.x;
                        svgScaleY = scale.y;
                    }
                }

                // Start from the full video rectangle; the SVG paths are subtracted from it below.
                if (pathList.size() > 0) {
                    RectF fullRectF = new RectF(videoRectF);
                    fullRectF.left = Math.max(fullRectF.left, 0);
                    fullRectF.top = Math.max(fullRectF.top, 0);
                    if (screenHeight != 0 && screenWidth != 0) {
                        if (fullRectF.right > fullRectF.bottom) {
                            fullRectF.right = Math.min(screenHeight, videoRectF.right);
                            fullRectF.bottom = Math.min(screenWidth, videoRectF.bottom);
                        } else {
                            fullRectF.right = Math.min(screenWidth, videoRectF.right);
                            fullRectF.bottom = Math.min(screenHeight, videoRectF.bottom);
                        }
                    }
                    fullPath.addRect(fullRectF, Path.Direction.CW);
                }

                // get human face path
                for (final Path originPath : pathList) {
                    mMatrix.reset();
                    // 1. Apply the SVG's own transform (scale, then translate).
                    mMatrix.postScale(svgScaleX, svgScaleY);
                    mMatrix.postTranslate(svgTranslateX, svgTranslateY);
                    // 2. Scale uniformly from mask size to the displayed video size.
                    final float scaleX = videoViewWidth * 1.0f / maskWidth;
                    final float scaleY = videoViewHeight * 1.0f / maskHeight;
                    if (scaleX < scaleY) {
                        mMatrix.postScale(scaleX, scaleX);
                    } else {
                        mMatrix.postScale(scaleY, scaleY);
                    }
                    // 3. Move to where the video sits inside the container.
                    mMatrix.postTranslate(videoLeftPosition, videoTopPosition);
                    Path path = new Path(originPath);
                    path.transform(mMatrix);
                    // human face path
                    if (Build.VERSION.SDK_INT >= 21) {
                        fullPath.op(path, Path.Op.DIFFERENCE);
                    }
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                try {
                    inputStream.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            return fullPath;
        }

        // Extracts the two arguments of a transform function such as "scale(0.1,-0.1)".
        private static Point getTransform(final String transformStr, final String fun) {
            int index = transformStr.indexOf(fun);
            if (index == -1) {
                return null;
            }
            String valueStr = transformStr.substring(index + fun.length());
            String regex = "\\((\\-|\\+)?\\d+(\\.\\d+)?,(\\-|\\+)?\\d+(\\.\\d+)?\\)";
            Pattern compile = Pattern.compile(regex);
            Matcher matcher = compile.matcher(valueStr);
            if (!matcher.lookingAt()) {
                return null;
            }
            int end = matcher.end();
            String[] result = valueStr.substring(0, end).split(",");
            if (result.length != 2) {
                return null;
            }
            String x = result[0].substring(1);
            String y = result[1].substring(0, result[1].length() - 1);
            return new Point(Float.parseFloat(x), Float.parseFloat(y));
        }

        // Drops anything that follows the closing </svg> tag.
        private static String getSuitSvgStr(String originStr) {
            final int index = originStr.indexOf("</svg>");
            if (index != -1) {
                return originStr.substring(0, index + 6);
            }
            return originStr;
        }

        private static class Point {
            private float x;
            private float y;

            public Point(final float x, final float y) {
                this.x = x;
                this.y = y;
            }
        }
    }
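The coordinate mapping inside parseSVG can be followed on a single point. The plain-Java sketch below, with hypothetical names, parses the two-argument `translate(...)` and `scale(...)` functions and then applies the same chain as the utility class: SVG scale, SVG translate, uniform scale from mask size to displayed video size, and finally the offset of the video rectangle. It is a simplified illustration and handles only the `name(a,b)` form used in the example SVG.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Illustrative walk-through of the mask-to-view coordinate mapping (hypothetical helper). */
final class SvgTransformSketch {
    private static final String NUM = "([-+]?\\d+(?:\\.\\d+)?)";

    /** Returns {a, b} for a function written as name(a,b), or null if it is absent. */
    static float[] parsePair(String transform, String name) {
        Pattern p = Pattern.compile(Pattern.quote(name) + "\\(" + NUM + "," + NUM + "\\)");
        Matcher m = p.matcher(transform);
        if (!m.find()) {
            return null;
        }
        return new float[] {Float.parseFloat(m.group(1)), Float.parseFloat(m.group(2))};
    }

    /** Maps a point from SVG path units to view pixels; returns {x, y}. */
    static float[] toView(float x, float y, float[] scale, float[] translate,
                          int maskWidth, int maskHeight,
                          float videoLeft, float videoTop, float videoWidth, float videoHeight) {
        // 1. SVG transform: scale first, then translate (mask pixel space).
        float maskX = x * scale[0] + translate[0];
        float maskY = y * scale[1] + translate[1];
        // 2. Uniform scale from mask size to displayed video size.
        float fit = Math.min(videoWidth / maskWidth, videoHeight / maskHeight);
        // 3. Offset by the position of the video inside the container.
        return new float[] {videoLeft + maskX * fit, videoTop + maskY * fit};
    }
}
```

With the example SVG (455 x 256 mask, `translate(0,256) scale(0.1,-0.1)`) and a video displayed at (0, 137) with size 1080 x 607, the path point (0, 0) maps to the bottom-left corner of the video and (0, 2560) maps to its top-left corner, which shows that the negative y scale flips the path upright.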