
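Integrating Real-Time Conversational AI (Software Applications)

This article describes how to combine the Volcengine RTC SDK with the server-side OpenAPI to quickly build conversational AI applications with ultra-low latency and real-time interaction.

Supported platforms

This integration solution applies to Android, iOS, Windows, macOS, Linux, Web, Electron, Flutter, WeChat Mini Programs, Unity, Douyin Mini Games, and React Native.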

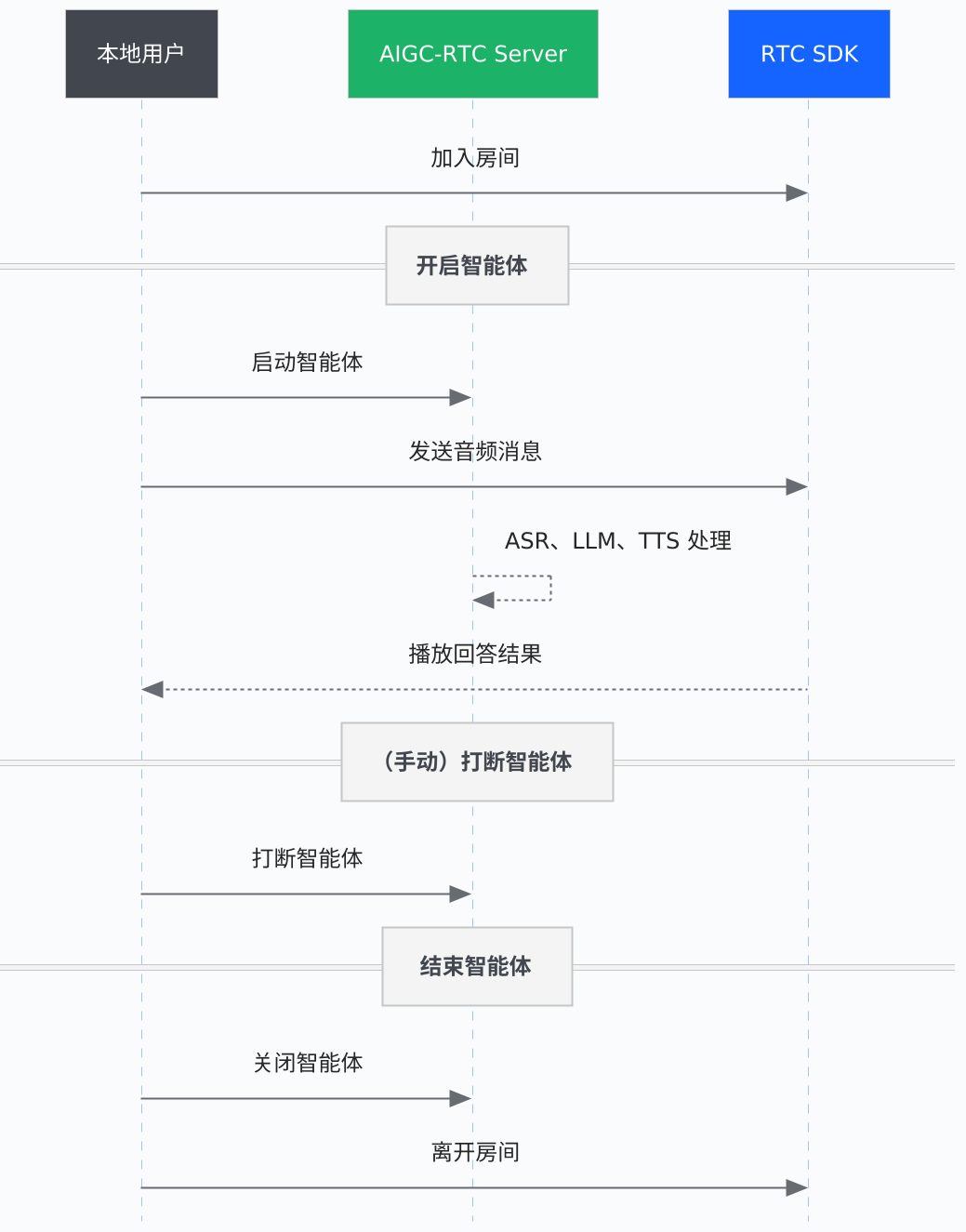

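Workflow

The figure below shows how real-time AI interaction is implemented:

Integration guide

Prerequisites

- You have activated the RTC, ASR, TTS, and LLM services and configured an account with the required permissions. For details, see Preparation.

- You have generated a Token for RTC SDK room-entry authentication. For details, see Authenticate with a Token.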

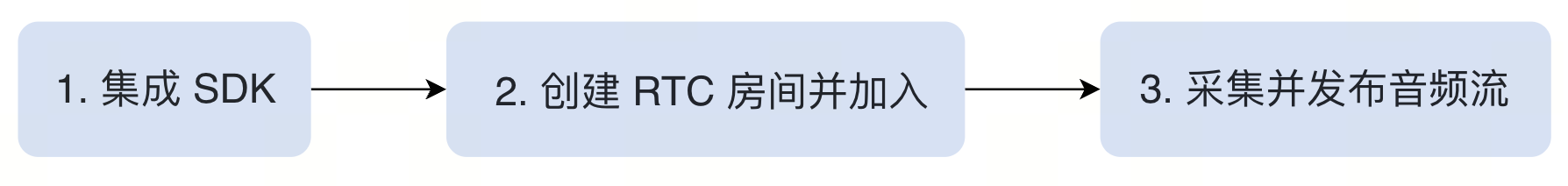

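1. Implement audio/video calls

First, integrate the RTC SDK into your app to implement basic audio/video calling, which provides the environment the AI agent will join. This consists of the following three core steps:

Quick implementation

Integrate the RTC SDK for your client platform (iOS, Android, Web, and so on) to implement audio/video calling.

Key configuration and notes

- Subscribing and publishing streams: the default configuration is recommended, that is, audio streams are subscribed to and published automatically.

- If you need high audio quality: if your AI conversation scenario has higher audio quality requirements, for example playing music, call setAudioScenario to switch the phone's volume type to media volume.

- If you need to mute the human user: call muteAudioCapture to mute the microphone, which keeps the switching latency as low as possible. stopAudioCapture is not recommended, because it may cause audio stuttering or voice distortion.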
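The guidance above comes down to a small decision: which client calls, if any, to make on top of the defaults. The Python sketch below encodes that decision. The `ClientAudioNeeds` type and the `plan_client_audio_calls` helper are hypothetical, and the helper returns only method names. Real signatures and arguments differ per platform SDK, so check the client API reference for your platform.

```python
from dataclasses import dataclass


@dataclass
class ClientAudioNeeds:
    # Hypothetical description of the app's needs; this is not an SDK type.
    plays_music: bool = False  # high audio quality needed, e.g. music playback
    mute_user: bool = False    # the human user should be silenced


def plan_client_audio_calls(needs: ClientAudioNeeds) -> list[str]:
    """Return the names of RTC client methods to call, following the notes above.

    Only method names are returned; platform-specific arguments are not modeled.
    """
    # Default: automatic audio subscribe/publish, so nothing extra to call.
    calls: list[str] = []
    if needs.plays_music:
        # Switch the phone's volume type to media volume.
        calls.append("setAudioScenario")
    if needs.mute_user:
        # Mute the microphone instead of stopAudioCapture, to keep switching latency low.
        calls.append("muteAudioCapture")
    return calls
```

With both flags set, the plan is `["setAudioScenario", "muteAudioCapture"]`; stopAudioCapture never appears, which matches the recommendation above.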

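2. Start the real-time AI conversation

Once the audio/video call is set up, call StartVoiceChat from your server to bring the agent into the room, so that the user can talk with it.

- How to call the OpenAPI (authentication required): see How to Call OpenAPI.

- Quickly obtain the request body: go to the Run Demo page in the console and follow the on-screen prompts to configure and debug each setting. Once debugging succeeds, click Integration Code Sample in the upper-right corner of the page, and copy the request body code from the Configure Server Parameters section.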
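For orientation, here is a minimal Python sketch that assembles a StartVoiceChat request. It does not define the request format. The host `rtc.volcengineapi.com`, the API version `2024-12-01`, and the body field names (`AppId`, `RoomId`, `TaskId`, `Config`, `AgentConfig`, `UserId`, `TargetUserId`) are all assumptions. Replace them with the body copied from the console. Request signing, which the OpenAPI requires, is deliberately left out.

```python
import json
from urllib.parse import urlencode

# ASSUMPTIONS: the endpoint, version, and field names below are illustrative.
# Use the request body from the console's Integration Code Sample instead.
HOST = "https://rtc.volcengineapi.com"  # assumed endpoint
VERSION = "2024-12-01"                  # assumed API version


def build_start_voice_chat(app_id: str, room_id: str, task_id: str,
                           agent_user_id: str, target_user_id: str,
                           config: dict) -> tuple[str, str]:
    """Return (url, json_body) for StartVoiceChat. The request is NOT signed here."""
    query = urlencode({"Action": "StartVoiceChat", "Version": VERSION})
    body = {
        "AppId": app_id,
        "RoomId": room_id,   # the room the human user has already joined
        "TaskId": task_id,   # keep this ID; StopVoiceChat needs it later
        "Config": config,    # ASR / TTS / LLM settings, e.g. copied from the console
        "AgentConfig": {
            "UserId": agent_user_id,           # the agent's user ID in the room
            "TargetUserId": [target_user_id],  # the human user the agent talks to
        },
    }
    return f"{HOST}?{query}", json.dumps(body, ensure_ascii=False)
```

Keeping the `TaskId` you generate here matters, because the same ID identifies the task when you stop it in step 4.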

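Note

If the OpenAPI request returns 200 but the agent does not join the room, or joins but does not work properly, see the FAQ "Agent does not join the room or does not work properly?".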
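An HTTP 200 alone does not prove that the agent is working, so before turning to the FAQ it can help to inspect the response body as well. The helper below is a hypothetical sketch. It assumes that Volcengine OpenAPI reports failures in a `ResponseMetadata.Error` object with `Code` and `Message` fields. Confirm that against the API reference.

```python
import json
from typing import Optional


def check_start_voice_chat_response(status: int, raw_body: str) -> Optional[str]:
    """Return a short error description, or None if no error is reported.

    ASSUMPTION: errors appear under ResponseMetadata.Error with Code/Message.
    None only means the request was accepted. It does not prove the agent
    joined the room, which still has to be checked on the client side.
    """
    if status != 200:
        return f"HTTP {status}"
    try:
        data = json.loads(raw_body)
    except ValueError:
        return "response body is not valid JSON"
    if not isinstance(data, dict):
        return "unexpected response shape"
    error = (data.get("ResponseMetadata") or {}).get("Error")
    if error:
        return f"{error.get('Code')}: {error.get('Message')}"
    return None
```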

The human user and the agent can now hold a real-time conversation in the room.

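3. Advanced features

Beyond basic AI conversation, you can integrate advanced features to improve the user experience, such as real-time subtitles, interrupting the agent, receiving agent status, and visual understanding. For the supported features and how to implement them, see Advanced Features.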

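4. End the conversation and leave the room

By default, after the human user leaves the room, the agent task stops automatically after 180 s, and those 180 s are still billed. When the conversation ends, you can take the following steps to avoid unnecessary resource consumption and charges:

- Call StopVoiceChat to stop the current server-side agent task.

- On the client, call leaveRoom so the human user leaves the room.

- On the client, call destroyRTCEngine to destroy the RTC engine instance and release its resources.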
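The following sketch shows the cleanup order. In a real app, StopVoiceChat runs on your server, while leaveRoom and destroyRTCEngine run on the client. Here all three are passed in as plain callables (a hypothetical interface), so the ordering is visible in one place. `try`/`finally` makes sure the client still leaves the room and releases the engine even if the server call fails.

```python
from typing import Callable


def end_conversation(stop_voice_chat: Callable[[str], None],
                     leave_room: Callable[[], None],
                     destroy_engine: Callable[[], None],
                     task_id: str) -> list[str]:
    """Run the shutdown steps in order and return the names of completed steps.

    The callables are hypothetical stand-ins for StopVoiceChat (server),
    leaveRoom and destroyRTCEngine (client).
    """
    done: list[str] = []
    try:
        # Stop the agent task now instead of waiting out the billed 180 s timeout.
        stop_voice_chat(task_id)
        done.append("StopVoiceChat")
    finally:
        # Client cleanup runs even if the server call raised; the error still propagates.
        leave_room()
        done.append("leaveRoom")
        destroy_engine()
        done.append("destroyRTCEngine")
    return done
```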

FAQ

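Agent does not join the room or does not work properly?

Audio capture picks up the agent's own speech, and the agent starts answering itself.

Lowering the volume gain can reduce ASR misrecognition caused by noise. For details, see How can I improve speech recognition accuracy?.

When starting a new round of conversation, can the agent carry over the context of the previous round?

Yes. When starting a new round, pass the context of the previous round as the value of the UserPrompts parameter.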
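Here is a minimal sketch of turning the previous round's transcript into UserPrompts. It assumes, without verification, that UserPrompts is a list of objects with `Role` (`user` or `assistant`) and `Content` fields. The `build_user_prompts` helper and its `max_items` cap are hypothetical. The cap only serves to keep the carried-over context bounded.

```python
from typing import Iterable


def build_user_prompts(history: Iterable[tuple[str, str]], max_items: int = 20) -> list[dict]:
    """Convert (role, content) pairs into an assumed UserPrompts list.

    ASSUMPTION: each entry looks like {"Role": ..., "Content": ...}.
    Only the most recent max_items entries are kept.
    """
    prompts = []
    for role, content in history:
        if role not in ("user", "assistant"):
            raise ValueError(f"unexpected role: {role}")
        prompts.append({"Role": role, "Content": content})
    # Guard max_items <= 0 explicitly: prompts[-0:] would return the whole list.
    return prompts[-max_items:] if max_items > 0 else []
```

The result would then go into the LLM configuration of the next StartVoiceChat request, for example `llm_config["UserPrompts"] = build_user_prompts(history)`, where `llm_config` stands for the LLM section of your request body.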

Last updated: 2025.12.22 13:12:17
