    #include "sami_core.h"

    #include <cassert>
    #include <cstring>
    #include <string>

    // sample_rate and num_channels are provided by the caller.

    // step 1, create handle
    SAMICoreHandle handle;
    int ret = SAMICoreCreateHandleByIdentify(&handle, SAMICoreIdentify::SAMICoreIdentify_Processor_TimeDomainPitchShifter, NULL);
    assert(ret == SAMI_OK);

    // step 2, set initial parameters (optional)
    SAMICoreProperty property;
    std::string param_str = R"({"parameters": {"Pitch Ratio": 1.5} })";
    property.type = SAMICoreDataType_String;
    property.data = (void*)(param_str.c_str());
    property.dataLen = param_str.size();
    ret = SAMICoreSetProperty(handle, SAMICorePropertyID_Processor_SetParametersOffline, &property);
    assert(ret == SAMI_OK);

    // step 3, prepare for processing
    const int block_size = 512;
    SAMICoreProcessorPrepareParameter parameter;
    memset(&parameter, 0, sizeof(parameter));
    parameter.blockSize = block_size;
    parameter.sampleRate = sample_rate;
    property.data = &(parameter);
    property.id = SAMICorePropertyId::SAMICorePropertyID_Processor_Prepare;
    property.dataLen = sizeof(SAMICoreProcessorPrepareParameter);
    ret = SAMICoreSetProperty(handle, SAMICorePropertyId::SAMICorePropertyID_Processor_Prepare, &property);
    assert(ret == SAMI_OK);

    // step 4, create input and output audio block
    SAMICoreAudioBuffer in_audio_buffer;
    in_audio_buffer.numberChannels = num_channels;
    in_audio_buffer.numberSamples = block_size;
    in_audio_buffer.data = new float *[num_channels];

    SAMICoreAudioBuffer out_audio_buffer;
    out_audio_buffer.numberChannels = num_channels;
    out_audio_buffer.numberSamples = block_size;
    out_audio_buffer.data = new float *[num_channels];

    for (int c = 0; c < int(num_channels); ++c) {
        in_audio_buffer.data[c] = new float[block_size];
        out_audio_buffer.data[c] = new float[block_size];
    }

    SAMICoreBlock in_block;
    in_block.dataType = SAMICoreDataType::SAMICoreDataType_AudioBuffer;
    in_block.numberAudioData = 1;
    in_block.audioData = &in_audio_buffer;

    SAMICoreBlock out_block;
    out_block.dataType = SAMICoreDataType::SAMICoreDataType_AudioBuffer;
    out_block.numberAudioData = 1;
    out_block.audioData = &out_audio_buffer;

    // step 5, process block by block
    for (; hasAudioSamples();) {
        copySamplesToInputBuffer(in_audio_buffer);
        int ret = SAMICoreProcess(handle, &in_block, &out_block);
        assert(ret == SAMI_OK);
        doSomethingAfterProcess(out_audio_buffer);
    }

    // step 6, remember to release resources
    ret = SAMICoreDestroyHandle(handle);
    for (int c = 0; c < int(num_channels); ++c) {
        delete [] in_audio_buffer.data[c];
        delete [] out_audio_buffer.data[c];
    }
    delete [] in_audio_buffer.data;
    delete [] out_audio_buffer.data;

I. Create the algorithm handle

The handle is created from a processor identity, using one of the SAMICoreIdentify_Processor_xxx identifiers.

    SAMICoreHandle handle;
    int ret = SAMICoreCreateHandleByIdentify(&handle, SAMICoreIdentify::SAMICoreIdentify_Processor_TimeDomainPitchShifter, NULL);
    assert(ret == SAMI_OK);

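For the feature each identity corresponds to, see the list in "Introduction to Single-Effect Processors" (《单音效处理器介绍》) in the same directory.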

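II. Prepare

The algorithm must be prepared before any audio is processed, so prepare has to be called before the first process call. Prepare takes the audio sample rate and the max block size. The max block size is the largest audio block that can occur at any point during processing; this value is usually supplied by the device or by the caller.

Prepare allocates memory, so it must not be called on the real-time thread. The correct approach is to call prepare on the main thread and let the real-time thread only call process, which keeps the real-time thread realtime-safe. For details, see: Ross Bencina » Real-time audio programming 101: time waits for nothing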

    // `property` is a SAMICoreProperty, declared as in the complete example above
    const int block_size = 512;
    SAMICoreProcessorPrepareParameter parameter;
    memset(&parameter, 0, sizeof(parameter));
    parameter.blockSize = block_size;
    parameter.sampleRate = sample_rate;
    property.data = &(parameter);
    property.id = SAMICorePropertyId::SAMICorePropertyID_Processor_Prepare;
    property.dataLen = sizeof(SAMICoreProcessorPrepareParameter);
    ret = SAMICoreSetProperty(handle, SAMICorePropertyId::SAMICorePropertyID_Processor_Prepare, &property);
    assert(ret == SAMI_OK);
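The split between prepare and process can be illustrated without the SDK. The sketch below is a minimal, hypothetical `GainProcessor` (not a SAMI Core type) that allocates only in `prepare()` and rejects any block larger than the max block size it was prepared with. It only illustrates the pattern; how SAMI Core behaves internally is not stated in this document.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical processor, not part of SAMI Core. It only illustrates the
// "allocate in prepare, never in process" pattern.
class GainProcessor {
public:
    // Call on the main thread: this is the only method that allocates.
    void prepare(double sample_rate, std::size_t max_block_size) {
        assert(max_block_size > 0);
        sample_rate_ = sample_rate;
        scratch_.assign(max_block_size, 0.0f);
    }

    // Call on the audio thread: no allocation and no locks.
    // Returns false if the block is larger than prepare() was told to expect.
    bool process(const float* in, float* out, std::size_t num_samples) {
        if (num_samples > scratch_.size()) return false;
        for (std::size_t i = 0; i < num_samples; ++i) scratch_[i] = in[i] * gain_;
        for (std::size_t i = 0; i < num_samples; ++i) out[i] = scratch_[i];
        return true;
    }

    double sampleRate() const { return sample_rate_; }
    std::size_t maxBlockSize() const { return scratch_.size(); }

private:
    double sample_rate_ = 0.0;
    float gain_ = 0.5f;
    std::vector<float> scratch_;  // sized once in prepare()
};
```

Blocks shorter than the max block size are accepted; only larger ones are refused, which mirrors the meaning of "max block size" described above.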

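III. Set parameters

Before going further, it helps to understand the general flow of real-time audio processing. A real-time audio scenario usually involves two threads: the audio thread and another thread (for example, the UI thread). The audio thread has higher priority and runs the audio algorithm; the other thread has lower priority and is responsible for changing parameters or sending audio events. The pseudocode below illustrates this interaction: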

    SAMICoreHandle handle = createHandle(...);
    auto sample_rate = 44100.0;
    auto max_block_size = 512;

    // audio thread: high priority, only runs the processing
    auto audio_worker = [&]() {
        auto ten_seconds_of_samples = sample_rate * 10;
        auto num_rendered_samples = 0;
        for (; num_rendered_samples < ten_seconds_of_samples;) {
            // ... fill some to in_block
            int ret = SAMICoreProcess(handle, &in_block, &out_block);
            // ... fill some output buffer
            num_rendered_samples += max_block_size;
        }
    };

    // other thread: lower priority, changes parameters or sends events
    auto gui_worker = [&]() {
        while (/* time elapsed is less than 10 seconds */) {
            // ... emplace parameter event or midi event
        }
    };

    auto audio_future = std::async(std::launch::async, audio_worker);
    auto gui_future = std::async(std::launch::async, gui_worker);
    gui_future.wait();
    audio_future.wait();

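Setting parameters falls into two cases:

The handle has been created on the main thread, but the audio thread has not yet started processing audio. In this case, call the offline parameter interface.

The audio thread has already started and is processing audio. In this case, call the real-time parameter interface.

Why distinguish the two cases? To guarantee realtime safety: the offline parameter interface cannot guarantee it, whereas the real-time parameter interface can.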
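One common way to hand parameter changes to an audio thread without locks or allocation is a fixed-size single-producer/single-consumer queue. The sketch below is a generic illustration of that idea; `ParamEvent` and `ParamQueue` are hypothetical names, and nothing here claims to be how SAMI Core implements its real-time parameter interface.

```cpp
#include <array>
#include <atomic>
#include <cstddef>

// Hypothetical event type for illustration; it is not SAMICoreContextParameterEvent.
struct ParamEvent {
    int index;    // which parameter to change
    float value;  // new value
};

// Single-producer / single-consumer ring buffer with fixed capacity.
// One slot is always left empty so that "full" and "empty" can be told apart,
// which means a queue of Capacity N holds at most N - 1 events.
template <std::size_t Capacity>
class ParamQueue {
    static_assert(Capacity >= 2, "need at least one usable slot");

public:
    // Called from the non-real-time thread. Returns false when the queue is full.
    bool push(const ParamEvent& e) {
        const std::size_t head = head_.load(std::memory_order_relaxed);
        const std::size_t next = (head + 1) % Capacity;
        if (next == tail_.load(std::memory_order_acquire)) return false;
        slots_[head] = e;
        head_.store(next, std::memory_order_release);
        return true;
    }

    // Called from the audio thread. Returns false when there is nothing to read.
    bool pop(ParamEvent& out) {
        const std::size_t tail = tail_.load(std::memory_order_relaxed);
        if (tail == head_.load(std::memory_order_acquire)) return false;
        out = slots_[tail];
        tail_.store((tail + 1) % Capacity, std::memory_order_release);
        return true;
    }

private:
    std::array<ParamEvent, Capacity> slots_{};
    std::atomic<std::size_t> head_{0};  // next slot to write
    std::atomic<std::size_t> tail_{0};  // next slot to read
};
```

The audio thread would drain the queue at the start of each block and apply the values before processing. Both methods never allocate or block, which is the property a real-time thread needs.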

Setting parameters offline

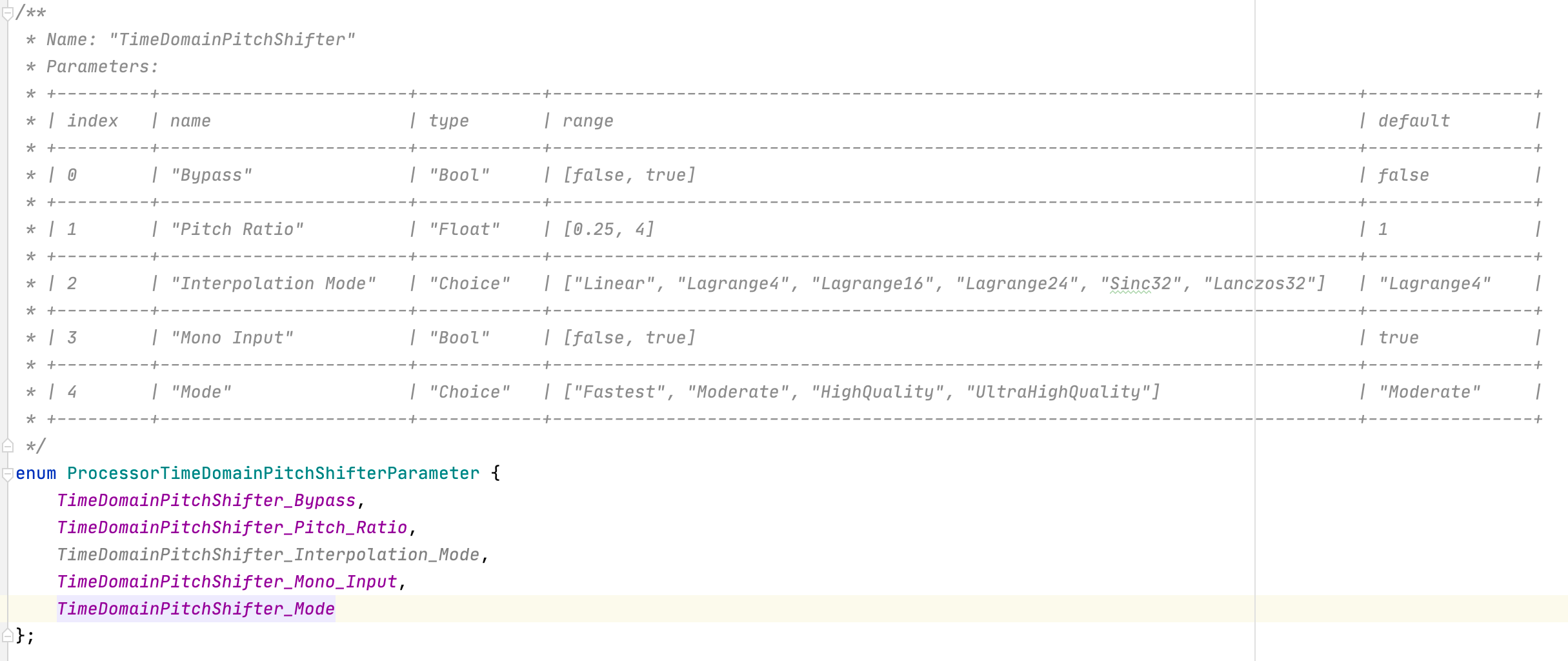

Offline parameters are set through a JSON string. The parameter information for each processor can be found in sami_core_effect_param.h. Here is an example:

    // set pitch ratio offline before prepare
    SAMICoreProperty property;
    std::string param_str = "{\"parameters\": {\"Pitch Ratio\": 1.5} }";
    property.type = SAMICoreDataType_String;
    property.data = (void*)(param_str.c_str());
    property.dataLen = param_str.size();
    ret = SAMICoreSetProperty(handle, SAMICorePropertyID_Processor_SetParametersOffline, &property);
    assert(ret == SAMI_OK);
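Writing the escaped JSON by hand is easy to get wrong. As a small sketch, the hypothetical helper below (`makeOfflineParameterJson` is not an SDK function) builds a string of the same shape as the example above for a single numeric parameter. It assumes the parameter name contains no quotes or backslashes, since it does no JSON escaping.

```cpp
#include <cassert>
#include <locale>
#include <sstream>
#include <string>

// Hypothetical helper, not part of SAMI Core: builds
// {"parameters": {"<name>": <value>}} for one numeric parameter.
// The name is inserted verbatim, so it must not need JSON escaping.
std::string makeOfflineParameterJson(const std::string& name, double value) {
    assert(name.find('"') == std::string::npos);
    assert(name.find('\\') == std::string::npos);
    std::ostringstream out;
    out.imbue(std::locale::classic());  // always use '.' as the decimal point
    out << "{\"parameters\": {\"" << name << "\": " << value << "}}";
    return out.str();
}
```

The returned string can then be passed through `property.data` and `property.dataLen` exactly as `param_str` is in the example above.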

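Setting parameters in real time

Parameters can also be set in real time. This is thread-safe and avoids artifacts such as pops and noise.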

    SAMICoreContextParameterEvent param_event;
    memset(&param_event, 0, sizeof(param_event));
    float pitch_ratio = 3.0;
    param_event.parameterIndex = ProcessorTimeDomainPitchShifterParameter::TimeDomainPitchShifter_Pitch_Ratio;
    param_event.plainValue = pitch_ratio;

    SAMICoreProperty prop;
    prop.data = &param_event;
    prop.type = SAMICoreDataType_ParameterEvent;
    prop.dataLen = sizeof(SAMICoreContextParameterEvent);
    int ret = SAMICoreSetProperty(handle, SAMICorePropertyID_Processor_ContextEmplaceParameterEventNowWithPlainValue, &prop);

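SAMICoreContextParameterEvent describes which parameter to change and its new value; see sami_core_effect_param.h for details.

parameterIndex: the ID of the parameter.

plainValue: the parameter value, which has one of three data types: float, bool, or choice.

Taking "TimeDomainPitchShifter" as an example: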

| Data type | Content |
|---|---|
| Float | Enter the desired value directly |
| Bool | 0 means false, 1 means true |
| Choice | For "Interpolation Mode", 0 means "Linear", 1 means "Lagrange4", and so on |
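The table can be read as a mapping from each data type to a single float. The helpers below are a hypothetical sketch of that mapping; the enum lists only the two "Interpolation Mode" choices named in the table, and the real definitions live in sami_core_effect_param.h.

```cpp
// Hypothetical enum mirroring the two choices named in the table above.
// The authoritative list is in sami_core_effect_param.h.
enum class InterpolationMode { Linear = 0, Lagrange4 = 1 };

// Float parameters are passed through unchanged.
inline float plainFromFloat(float v) { return v; }

// Bool parameters: 0 means false, 1 means true.
inline float plainFromBool(bool v) { return v ? 1.0f : 0.0f; }

// Choice parameters: the plain value is the index of the chosen option.
inline float plainFromChoice(InterpolationMode m) {
    return static_cast<float>(static_cast<int>(m));
}
```

The result of any of these helpers is what would be assigned to `plainValue`.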

IV. Create buffers

SAMICoreAudioBuffer holds audio data. It supports only planar float data. For more on audio data formats, see the glossary section.

SAMICoreBlock holds the data to be processed.

    SAMICoreAudioBuffer in_audio_buffer;
    in_audio_buffer.numberChannels = num_channels;
    in_audio_buffer.numberSamples = block_size;
    in_audio_buffer.data = new float *[num_channels];

    SAMICoreAudioBuffer out_audio_buffer;
    out_audio_buffer.numberChannels = num_channels;
    out_audio_buffer.numberSamples = block_size;
    out_audio_buffer.data = new float *[num_channels];

    for (int c = 0; c < int(num_channels); ++c) {
        in_audio_buffer.data[c] = new float[block_size];
        out_audio_buffer.data[c] = new float[block_size];
    }

    SAMICoreBlock in_block;
    in_block.dataType = SAMICoreDataType::SAMICoreDataType_AudioBuffer;
    in_block.numberAudioData = 1;
    in_block.audioData = &in_audio_buffer;

    SAMICoreBlock out_block;
    out_block.dataType = SAMICoreDataType::SAMICoreDataType_AudioBuffer;
    out_block.numberAudioData = 1;
    out_block.audioData = &out_audio_buffer;

The buffers can also be used in place, with the input and output channels pointing at the same existing memory. For example:

    for (int c = 0; c < int(num_channels); ++c) {
        in_audio_buffer.data[c] = getPointFromExistMem(c);
        out_audio_buffer.data[c] = getPointFromExistMem(c);
    }
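Because SAMICoreAudioBuffer accepts only planar float data, audio that arrives interleaved (L R L R ...) has to be split into one array per channel first. The function below is a minimal sketch of that conversion; `deinterleave` is a hypothetical helper, not an SDK function, and it allocates, so it belongs outside the real-time thread.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Splits interleaved samples (frame by frame: ch0 ch1 ch0 ch1 ...) into
// one contiguous array per channel (planar layout).
std::vector<std::vector<float>> deinterleave(const std::vector<float>& interleaved,
                                             std::size_t num_channels) {
    assert(num_channels > 0);
    assert(interleaved.size() % num_channels == 0);
    const std::size_t num_frames = interleaved.size() / num_channels;
    std::vector<std::vector<float>> planar(num_channels, std::vector<float>(num_frames));
    for (std::size_t frame = 0; frame < num_frames; ++frame) {
        for (std::size_t c = 0; c < num_channels; ++c) {
            planar[c][frame] = interleaved[frame * num_channels + c];
        }
    }
    return planar;
}
```

Each `planar[c].data()` can then serve as the pointer for channel `c`.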

V. Process audio

Copy data and process

Copy the audio to be processed into in_audio_buffer. After SAMICoreProcess runs, the result is written to out_audio_buffer.

    for (; hasAudioSamples();) {
        copySamplesToInputBuffer(in_audio_buffer);
        int ret = SAMICoreProcess(handle, &in_block, &out_block);
        assert(ret == SAMI_OK);
        doSomethingAfterProcess(out_audio_buffer);
    }
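When the total number of samples is not a multiple of the block size, the last block is shorter than the rest. The sketch below computes the block lengths for such a run; `splitIntoBlocks` is a hypothetical helper. Setting `numberSamples` to the shorter length for the final block is an assumption here, based on the max block size being described as an upper bound.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the length of each block needed to cover total_samples,
// where no block is longer than max_block_size.
std::vector<std::size_t> splitIntoBlocks(std::size_t total_samples,
                                         std::size_t max_block_size) {
    assert(max_block_size > 0);
    std::vector<std::size_t> lengths;
    std::size_t remaining = total_samples;
    while (remaining > 0) {
        const std::size_t n = std::min(remaining, max_block_size);
        lengths.push_back(n);
        remaining -= n;
    }
    return lengths;
}
```

For 1200 samples and a block size of 512, this yields two full blocks followed by one block of 176 samples.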

VI. Release resources

    ret = SAMICoreDestroyHandle(handle);

In addition, remember to free the memory that holds the audio data (if you allocated any). For example:

    for (int c = 0; c < int(num_channels); ++c) {
        delete [] in_audio_buffer.data[c];
        delete [] out_audio_buffer.data[c];
    }
    delete [] in_audio_buffer.data;
    delete [] out_audio_buffer.data;
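The manual new/delete pairs can be avoided by letting a small owner object hold the memory. The class below is a sketch of that idea; `PlanarBuffer` is a hypothetical helper, not an SDK type. It relies only on what the examples above show, namely that the `data` field is a `float**` with one pointer per channel.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical RAII owner for planar float audio. All samples live in one
// contiguous vector and channels_ holds one pointer per channel into it.
class PlanarBuffer {
public:
    PlanarBuffer(std::size_t num_channels, std::size_t num_samples)
        : num_samples_(num_samples),
          storage_(num_channels * num_samples, 0.0f),
          channels_(num_channels) {
        assert(num_channels > 0);
        for (std::size_t c = 0; c < num_channels; ++c) {
            channels_[c] = storage_.data() + c * num_samples;
        }
    }

    // Copying would leave the channel pointers aimed at the other object's storage.
    PlanarBuffer(const PlanarBuffer&) = delete;
    PlanarBuffer& operator=(const PlanarBuffer&) = delete;

    float** data() { return channels_.data(); }
    std::size_t numChannels() const { return channels_.size(); }
    std::size_t numSamples() const { return num_samples_; }

private:
    std::size_t num_samples_;
    std::vector<float> storage_;   // zero-initialised samples, channel after channel
    std::vector<float*> channels_; // per-channel pointers into storage_
};
```

With this, `in_audio_buffer.data = buffer.data();` replaces the allocation loop, and the memory is freed when `buffer` goes out of scope, provided that happens after the handle has finished using it.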