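Background

Kubernetes (K8S for short) is an open-source system for managing containerized applications across multiple hosts in a cloud platform. Its goal is to make deploying containerized applications simple and efficient, and it provides a mechanism for deploying, scheduling, updating, and maintaining applications.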

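Compared with a managed K8S service, a self-built K8S cluster has the following advantages:

- Consistent versions and lower maintenance cost: in multi-cloud scenarios (public and private clouds), K8S versions and some features differ between cloud vendors. A self-built K8S cluster keeps the version and features identical across all public and private clouds, which reduces maintenance cost.

- Latest stable version: for stability reasons, the version that cloud vendors deploy usually lags behind the latest stable release published by the community. With a self-built cluster you can move to the latest stable release whenever you choose.

- Independence and portability: cloud vendors implement components such as CNI and the load balancer differently. When a large service cluster that depends on these features is migrated from one cloud to another, many deployment files have to be modified. A self-built cluster reduces the dependency on any single vendor and keeps K8S applications independent and portable.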

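Common ways to install K8S include kubespray, kubeadm, and binary deployment. This document uses kubeadm as an example and guides you through deploying a highly available K8S cluster on Volcano Engine.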

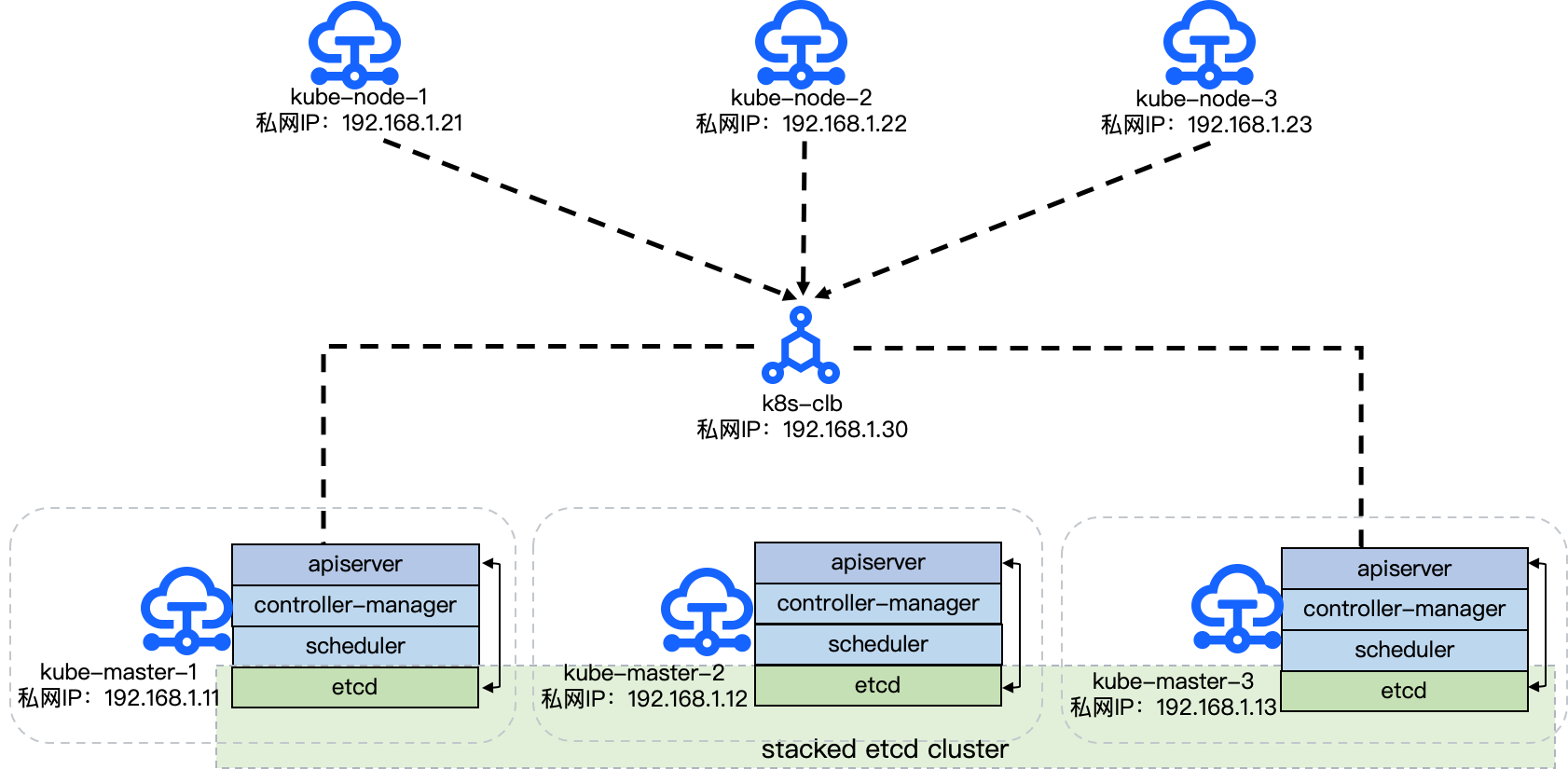

组网示意图如下图所示。

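Prerequisites

Before you start this practice, complete the following preparations:

- Register a Volcano Engine account and complete enterprise real-name verification. You can log in to the Volcano Engine console to check whether verification is complete.

- Make sure the balance of your Volcano Engine account is greater than CNY 100. You can log in to the Volcano Engine console to check the balance.

- Use your Volcano Engine account to activate the products listed under Data planning (private network, cloud servers, load balancer, and NAT gateway). The configuration data used to create each product is also given under Data planning.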

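Data planning

Note

The data here is a planning example. Adjust it to your actual plan when you perform the operations.

Private network configuration data

| Parameter | Region | Name | CIDR block | Availability zone | Subnet name | Subnet CIDR block |
|---|---|---|---|---|---|---|
| Value | North China 2 (Beijing) | k8s-vpc | 192.168.0.0/16 | Zone A | k8s-cluster-subnet | 192.168.1.0/24 |

Cloud server configuration data

| Parameter | Value (cloud server 1) | Value (cloud server 2) | Value (cloud server 3) | Value (cloud server 4) | Value (cloud server 5) | Value (cloud server 6) |
|---|---|---|---|---|---|---|
| Name | kube-master-1 | kube-master-2 | kube-master-3 | kube-node-1 | kube-node-2 | kube-node-3 |
| Billing type | Pay-as-you-go | Pay-as-you-go | Pay-as-you-go | Pay-as-you-go | Pay-as-you-go | Pay-as-you-go |
| Region | North China 2 (Beijing) | North China 2 (Beijing) | North China 2 (Beijing) | North China 2 (Beijing) | North China 2 (Beijing) | North China 2 (Beijing) |
| Availability zone | Zone A | Zone A | Zone A | Zone A | Zone A | Zone A |
| Instance type | General purpose ecs.g1.xlarge (4c16g) | General purpose ecs.g1.xlarge (4c16g) | General purpose ecs.g1.xlarge (4c16g) | General purpose ecs.g1.xlarge (4c16g) | General purpose ecs.g1.xlarge (4c16g) | General purpose ecs.g1.xlarge (4c16g) |
| Image | Public image CentOS 7.6 | Public image CentOS 7.6 | Public image CentOS 7.6 | Public image CentOS 7.6 | Public image CentOS 7.6 | Public image CentOS 7.6 |
| System disk | 100 GiB | 100 GiB | 100 GiB | 100 GiB | 100 GiB | 100 GiB |

Load balancer configuration data

| Parameter | Name | Region | Network type | Private network | Subnet | Specification |
|---|---|---|---|---|---|---|
| Value | k8s-clb | North China 2 (Beijing) | Private | k8s-vpc | k8s-cluster-subnet | Small I |

NAT gateway configuration data

| Parameter | Name | Region | Private network | Subnet | Specification |
|---|---|---|---|---|---|
| Value | k8s-nat | North China 2 (Beijing) | k8s-vpc | k8s-cluster-subnet | Small I |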
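When adjusting the plan, it can help to confirm that the private network range does not collide with the service and pod ranges that this practice later sets in kubeadm-init.yaml (10.2.0.0/16 and 10.3.0.0/16). The following is a minimal sketch of such a check in POSIX shell. The function names `ip_to_int`, `cidr_range`, `cidr_overlap`, and `check_pair` are illustrative and not part of any tool used in this document.

```shell
#!/bin/sh
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" (as integers) for a CIDR block such as 10.3.0.0/16.
cidr_range() {
    base=$(ip_to_int "${1%/*}")
    prefix=${1#*/}
    size=$(( 1 << (32 - $prefix) ))
    first=$(( $base / $size * $size ))
    echo "$first $(( $first + $size - 1 ))"
}

# Return 0 if the two CIDR blocks share at least one address.
cidr_overlap() {
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Report whether a pair of blocks overlaps.
check_pair() {
    if cidr_overlap "$1" "$2"; then
        echo "overlap: $1 $2"
    else
        echo "ok: $1 $2"
    fi
}

check_pair 192.168.0.0/16 10.2.0.0/16   # private network vs. service range
check_pair 192.168.0.0/16 10.3.0.0/16   # private network vs. pod range
check_pair 10.2.0.0/16 10.3.0.0/16      # service range vs. pod range
```

With the example values all three pairs are reported as non-overlapping. If you choose different ranges, rerun the check before creating the cluster.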

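Installation preparation

To deploy the K8S cluster faster, most operations in this practice are run in batches with Ansible, which avoids switching back and forth between nodes.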

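- On kube-master-1, configure passwordless SSH login to every host in the cluster.

  - Log in to the command line of kube-master-1 through ECS Terminal or a remote connection tool.

  - Run the vi /etc/hosts command to open the hosts file.

  - Press i to enter edit mode and append the following node entries to the end of the hosts file.

        192.168.1.11 kube-master-1
        192.168.1.12 kube-master-2
        192.168.1.13 kube-master-3
        192.168.1.21 kube-node-1
        192.168.1.22 kube-node-2
        192.168.1.23 kube-node-3

  - Press Esc to exit edit mode, then type :wq to save and exit.

  - Run the cat /etc/hosts command to view the modified hosts file and make sure its content is correct.

  - Run the following command to generate an SSH key.

        [root@kube-master-1 ~]# ssh-keygen -t rsa -P ''

    Note

    ssh-keygen generates, manages, and converts authentication keys for SSH. It supports several key types, including RSA and DSA. By default, SSH keys are stored in the ~/.ssh directory.

  - Run the following commands to configure passwordless login from kube-master-1 to every node, including itself.

        [root@kube-master-1 ~]# ssh-copy-id root@kube-master-1
        [root@kube-master-1 ~]# ssh-copy-id root@kube-master-2
        [root@kube-master-1 ~]# ssh-copy-id root@kube-master-3
        [root@kube-master-1 ~]# ssh-copy-id root@kube-node-1
        [root@kube-master-1 ~]# ssh-copy-id root@kube-node-2
        [root@kube-master-1 ~]# ssh-copy-id root@kube-node-3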
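The six ssh-copy-id calls differ only in the host name, so they can be produced by a loop. The sketch below only prints the commands (a dry run) so that the list can be reviewed first. The `HOSTS` variable and the `print_copy_id_cmds` function are illustrative names, and the host names are the ones from the data plan.

```shell
#!/bin/sh
# Host names from the data plan; adjust to your own cluster.
HOSTS="kube-master-1 kube-master-2 kube-master-3 kube-node-1 kube-node-2 kube-node-3"

# Print one ssh-copy-id command per host (dry run: nothing is executed).
print_copy_id_cmds() {
    for host in $HOSTS; do
        echo "ssh-copy-id root@${host}"
    done
}

print_copy_id_cmds
```

Once the printed list looks right, replacing the `echo` with a direct call to ssh-copy-id runs the copies one host at a time; each host still prompts for its root password.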

- On kube-master-1, install and configure Ansible.

  Note

  Ansible is a configuration management and application deployment tool written in Python. It combines the strengths of many operations tools (Puppet, CFEngine, Chef, SaltStack).

  EPEL is a third-party repository maintained by the Fedora community. Ansible is distributed through EPEL, so EPEL must be installed before Ansible.

  - Run the following commands to install EPEL and Ansible.

        yum install epel-release -y
        yum install ansible -y

  - Run the vi /etc/ansible/hosts command to open the Ansible inventory file.

  - Press i to enter edit mode and add the following content to the file.

        [k8smasters]
        kube-master-1 ansible_ssh_user=root
        kube-master-2 ansible_ssh_user=root
        kube-master-3 ansible_ssh_user=root

        [k8snodes]
        kube-node-1 ansible_ssh_user=root
        kube-node-2 ansible_ssh_user=root
        kube-node-3 ansible_ssh_user=root

        [k8shosts]
        kube-master-1 ansible_ssh_user=root
        kube-master-2 ansible_ssh_user=root
        kube-master-3 ansible_ssh_user=root
        kube-node-1 ansible_ssh_user=root
        kube-node-2 ansible_ssh_user=root
        kube-node-3 ansible_ssh_user=root

  - Press Esc to exit edit mode, then type :wq to save and exit.

  - Verify the Ansible installation and copy the hosts file to the other nodes.

        ansible k8shosts -m ping
        ansible k8shosts -m copy -a 'src=/etc/hosts dest=/etc/'
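The inventory above repeats every host in the combined k8shosts group, which is easy to get out of sync by hand. As a rough sketch, the same content can be generated from two host lists. `MASTERS`, `NODES`, `print_group`, and `print_inventory` are illustrative names, not Ansible features.

```shell
#!/bin/sh
MASTERS="kube-master-1 kube-master-2 kube-master-3"
NODES="kube-node-1 kube-node-2 kube-node-3"

# Print one inventory group: a [name] header followed by its hosts.
print_group() {
    group=$1
    shift
    echo "[${group}]"
    for host in "$@"; do
        echo "${host} ansible_ssh_user=root"
    done
}

# Print the full inventory: masters, nodes, and the combined group.
print_inventory() {
    print_group k8smasters $MASTERS
    print_group k8snodes $NODES
    print_group k8shosts $MASTERS $NODES
}

print_inventory
```

Redirecting the output (for example `print_inventory > /etc/ansible/hosts`) would replace the inventory file, so review the printed text before doing that.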


- Install Docker on all nodes.

  K8S supports many container runtimes, such as containerd, Kata Containers, and Docker. This practice uses Docker as the K8S runtime, which was the mainstream choice when this practice was written.

  - Add the Docker repository through Ansible.

        ansible k8shosts -m yum -a 'name=yum-utils state=latest'
        ansible k8shosts -m shell -a 'yum-config-manager --add-repo https://mirrors.ivolces.com/docker/linux/centos/docker-ce.repo'

  - Install Docker on all nodes through Ansible.

        ansible k8shosts -m yum -a 'name=docker-ce state=latest'
        ansible k8shosts -m service -a 'name=docker state=restarted enabled=yes'

  - Modify the Docker parameters and restart Docker.

    Note

    Adjust the Docker parameters here as needed.

        [root@kube-master-1 ~]# cat << EOF >/etc/docker/daemon.json
        {
          "exec-opts": ["native.cgroupdriver=systemd"],
          "log-driver": "json-file",
          "log-opts": {
            "max-size": "100m"
          },
          "storage-driver": "overlay2",
          "storage-opts": [
            "overlay2.override_kernel_check=true"
          ]
        }
        EOF
        [root@kube-master-1 ~]# ansible k8shosts -m copy -a 'src=/etc/docker/daemon.json dest=/etc/docker/daemon.json'
        [root@kube-master-1 ~]# ansible k8shosts -m service -a 'name=docker state=restarted enabled=yes'

  - Modify the system parameters on every node to enable IP forwarding and to let iptables process bridged traffic. kubeadm's preflight checks require net.bridge.bridge-nf-call-iptables to be 1, and the net.bridge.* keys exist only after the br_netfilter module is loaded, so the module is loaded before the settings are applied.

        [root@kube-master-1 ~]# cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
        br_netfilter
        EOF
        [root@kube-master-1 ~]# cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
        net.bridge.bridge-nf-call-iptables = 1
        net.ipv4.ip_forward = 1
        EOF
        [root@kube-master-1 ~]# ansible k8shosts -m copy -a 'src=/etc/modules-load.d/k8s.conf dest=/etc/modules-load.d/k8s.conf'
        [root@kube-master-1 ~]# ansible k8shosts -m copy -a 'src=/etc/sysctl.d/k8s.conf dest=/etc/sysctl.d/k8s.conf'
        [root@kube-master-1 ~]# ansible k8shosts -m shell -a 'sudo modprobe br_netfilter'
        [root@kube-master-1 ~]# ansible k8shosts -m shell -a 'sudo sysctl --system'
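After the files are copied, it can be useful to confirm that a sysctl file actually contains the values you expect before relying on it. The sketch below parses a "key = value" file and compares it with the expected pairs. `sysctl_conf_value` and `check_sysctl_conf` are hypothetical helper names, and the check reads only the file, not the live kernel settings.

```shell
#!/bin/sh
# Print the value assigned to a key in a sysctl-style file ("key = value").
sysctl_conf_value() {
    file=$1
    key=$2
    awk -F'=' -v k="$key" '
        { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
        $1 == k { print $2 }
    ' "$file"
}

# Return 0 only if every "key=value" argument matches the file.
check_sysctl_conf() {
    file=$1
    shift
    for pair in "$@"; do
        key=${pair%%=*}
        want=${pair#*=}
        got=$(sysctl_conf_value "$file" "$key")
        if [ "$got" != "$want" ]; then
            echo "mismatch: $key is '${got}', expected '${want}'"
            return 1
        fi
    done
    echo "ok"
}

# Example (run on a node after the file has been copied):
#   check_sysctl_conf /etc/sysctl.d/k8s.conf net.ipv4.ip_forward=1
```

To check the values the kernel is currently using, `sysctl net.ipv4.ip_forward` prints the live setting.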


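Configuration steps

The control-plane components on the master nodes (apiserver, controller-manager, scheduler, and etcd) all run as static pods. A static pod is managed directly by the kubelet on its node without involving the apiserver, and it is not associated with any ReplicaSet. The kubelet alone monitors the pod and restarts it when it finds that the pod has stopped.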
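To see which static pods a node runs, you can list the manifest files that the kubelet watches. kubeadm writes them to /etc/kubernetes/manifests by default. The following sketch prints one name per manifest; `list_static_pods` is an illustrative helper, not a kubeadm or kubectl command.

```shell
#!/bin/sh
# List the static pod manifests in a directory, one name per line.
# The default is the directory that kubeadm uses for its manifests.
list_static_pods() {
    dir=${1:-/etc/kubernetes/manifests}
    for f in "$dir"/*.yaml; do
        # Skip the unexpanded pattern when the directory has no manifests.
        [ -e "$f" ] || continue
        basename "$f" .yaml
    done
}

# Example (run on a master node after kubeadm init):
#   list_static_pods
```

On a control-plane node created by kubeadm, the list normally contains etcd, kube-apiserver, kube-controller-manager, and kube-scheduler.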

- Install the K8S packages.

  - Configure the Kubernetes repository.

        [root@kube-master-1 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
        [kubernetes]
        name=Kubernetes
        baseurl=https://mirrors.ivolces.com/kubernetes/yum/kubernetes-el7-x86_64
        enabled=1
        gpgcheck=0
        #repo_gpgcheck=1
        gpgkey=https://mirrors.ivolces.com/kubernetes/yum/doc/yum-key.gpg
        EOF
        [root@kube-master-1 ~]# ansible k8shosts -m copy -a 'src=/etc/yum.repos.d/kubernetes.repo dest=/etc/yum.repos.d/kubernetes.repo'

  - Install the packages. The versions are pinned to 1.19.8, so state=present is used to keep Ansible from upgrading them.

        ansible k8shosts -m yum -a 'name=kubelet-1.19.8,kubeadm-1.19.8,kubectl-1.19.8 state=present'
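Because kubelet, kubeadm, and kubectl should stay on the same version, it can help to derive the package list from a single variable. The sketch below builds the comma-separated list and prints the resulting Ansible command without running it. `k8s_packages` and `K8S_VERSION` are illustrative names, and `state=present` is this sketch's choice for keeping the pinned version.

```shell
#!/bin/sh
# Build the comma-separated package list for one Kubernetes version.
k8s_packages() {
    version=$1
    echo "kubelet-${version},kubeadm-${version},kubectl-${version}"
}

K8S_VERSION=1.19.8

# Print the command instead of running it, so it can be reviewed first.
echo "ansible k8shosts -m yum -a 'name=$(k8s_packages "$K8S_VERSION") state=present'"
```

Changing `K8S_VERSION` in one place then updates all three package names together.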


- Install the master nodes.

  - Run the following commands to add the apiserver address to the /etc/hosts file and copy the file to the other master nodes.

        [root@kube-master-1 ~]# echo '127.0.0.1 apiserver' >>/etc/hosts
        [root@kube-master-1 ~]# ansible k8smasters -m copy -a 'src=/etc/hosts dest=/etc/'

  - Run the following command to generate the configuration file, then edit it so that it matches the content shown below (advertiseAddress, name, imageRepository, controlPlaneEndpoint, kubernetesVersion, serviceSubnet, and podSubnet).

        [root@kube-master-1 ~]# kubeadm config print init-defaults > kubeadm-init.yaml
        [root@kube-master-1 ~]# cat kubeadm-init.yaml
        apiVersion: kubeadm.k8s.io/v1beta2
        bootstrapTokens:
        - groups:
          - system:bootstrappers:kubeadm:default-node-token
          token: abcdef.0123456789abcdef
          ttl: 24h0m0s
          usages:
          - signing
          - authentication
        kind: InitConfiguration
        localAPIEndpoint:
          advertiseAddress: 192.168.1.11
          bindPort: 6443
        nodeRegistration:
          criSocket: /var/run/dockershim.sock
          name: kube-master-1
          taints:
          - effect: NoSchedule
            key: node-role.kubernetes.io/master
        ---
        apiServer:
          timeoutForControlPlane: 4m0s
        apiVersion: kubeadm.k8s.io/v1beta2
        certificatesDir: /etc/kubernetes/pki
        clusterName: kubernetes
        controllerManager: {}
        dns:
          type: CoreDNS
        etcd:
          local:
            dataDir: /var/lib/etcd
        imageRepository: cr-cn-beijing.ivolces.com/volc
        controlPlaneEndpoint: "apiserver:6443"
        kind: ClusterConfiguration
        kubernetesVersion: v1.19.8
        networking:
          dnsDomain: cluster.local
          serviceSubnet: 10.2.0.0/16
          podSubnet: 10.3.0.0/16
        scheduler: {}

  - Pull the images.

        [root@kube-master-1 ~]# kubeadm config images pull --config kubeadm-init.yaml
        W0913 17:18:04.862188   31710 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
        [config/images] Pulled cr-cn-beijing.ivolces.com/volc/kube-apiserver:v1.19.8
        [config/images] Pulled cr-cn-beijing.ivolces.com/volc/kube-controller-manager:v1.19.8
        [config/images] Pulled cr-cn-beijing.ivolces.com/volc/kube-scheduler:v1.19.8
        [config/images] Pulled cr-cn-beijing.ivolces.com/volc/kube-proxy:v1.19.8
        [config/images] Pulled cr-cn-beijing.ivolces.com/volc/pause:3.2
        [config/images] Pulled cr-cn-beijing.ivolces.com/volc/etcd:3.4.13-0
        [config/images] Pulled cr-cn-beijing.ivolces.com/volc/coredns:1.7.0

  - Initialize the K8S cluster with kubeadm, then set up the kubeconfig file as the output instructs.

        [root@kube-master-1 ~]# kubeadm init --config=kubeadm-init.yaml --upload-certs
        Your Kubernetes control-plane has initialized successfully!

        To start using your cluster, you need to run the following as a regular user:

          mkdir -p $HOME/.kube
          sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
          sudo chown $(id -u):$(id -g) $HOME/.kube/config

        You should now deploy a pod network to the cluster.
        Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
          https://kubernetes.io/docs/concepts/cluster-administration/addons/

        You can now join any number of the control-plane node running the following command on each as root:

          kubeadm join apiserver:6443 --token abcdef.0123456789abcdef \
            --discovery-token-ca-cert-hash sha256:d6ea8cdf2777f087aca6001ca4eeeb5898e4159a3e6adeac41c3d72462a46002 \
            --control-plane --certificate-key b9c051d48267c9d0552fb237040ef95cbcd49d04fae4964a17b3d1f5c9b09c10

        Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
        As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
        "kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

        Then you can join any number of worker nodes by running the following on each as root:

        kubeadm join apiserver:6443 --token abcdef.0123456789abcdef \
            --discovery-token-ca-cert-hash sha256:d6ea8cdf2777f087aca6001ca4eeeb5898e4159a3e6adeac41c3d72462a46002

        [root@kube-master-1 ~]# mkdir -p $HOME/.kube
        [root@kube-master-1 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
        [root@kube-master-1 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

  - Install the flannel network plugin.

    Note

    kubeadm does not install a network solution by default, so you must install a network plugin yourself after kubeadm finishes. This document uses flannel.

    Download the network plugin manifest.

        [root@kube-master-1 ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

    Replace flannel's default image registry.

        sed -i 's#quay.io/coreos#cr-cn-beijing.volces.com/volc#g' kube-flannel.yml

    Edit the network configuration. In the net-conf.json section, set Network to the same value as the podSubnet field above.

        [root@kube-master-1 ~]# vi kube-flannel.yml
          net-conf.json: |
            {
              "Network": "10.3.0.0/16",
              "Backend": {
                "Type": "vxlan"
              }
            }

    Create flannel.

        [root@kube-master-1 ~]# kubectl apply -f kube-flannel.yml

  - Add the other two master nodes.

    Pull the images.

        [root@kube-master-1 ~]# ansible kube-master-2 -m shell -a 'kubeadm config images pull --image-repository=cr-cn-beijing.ivolces.com/volc --kubernetes-version=1.19.8'
        [root@kube-master-1 ~]# ansible kube-master-3 -m shell -a 'kubeadm config images pull --image-repository=cr-cn-beijing.ivolces.com/volc --kubernetes-version=1.19.8'

    Join the nodes to the cluster. The join command and its parameters are printed when the cluster is initialized in the previous step. The commands below use kube-master-1:6443 instead of apiserver:6443 because, on the master nodes, apiserver resolves to 127.0.0.1, where no apiserver is running until the join completes.

        [root@kube-master-1 ~]# ansible kube-master-2 -m shell -a 'kubeadm join kube-master-1:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:d6ea8cdf2777f087aca6001ca4eeeb5898e4159a3e6adeac41c3d72462a46002 --control-plane --certificate-key b9c051d48267c9d0552fb237040ef95cbcd49d04fae4964a17b3d1f5c9b09c10'
        [root@kube-master-1 ~]# ansible kube-master-3 -m shell -a 'kubeadm join kube-master-1:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:d6ea8cdf2777f087aca6001ca4eeeb5898e4159a3e6adeac41c3d72462a46002 --control-plane --certificate-key b9c051d48267c9d0552fb237040ef95cbcd49d04fae4964a17b3d1f5c9b09c10'
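Each master node runs an etcd member, and etcd needs a majority of its members to agree before it accepts a write. The arithmetic below is a small worked example of why three masters are used: it prints the majority size and the number of member failures the cluster can survive. The function names `quorum` and `fault_tolerance` are illustrative.

```shell
#!/bin/sh
# Votes needed for a majority in a cluster of n members.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Members that can fail while the cluster still has a majority.
fault_tolerance() {
    echo $(( $1 - $(quorum "$1") ))
}

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

With three members the majority is two, so one master can fail. A fourth member raises the majority to three without raising the number of tolerated failures, which is why odd member counts are the usual choice.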


- Add the worker nodes.

  - Configure hosts by adding the load balancer address (192.168.1.30 in this example) to all worker nodes.

        [root@kube-master-1 ~]# ansible k8snodes -m shell -a "echo 192.168.1.30 apiserver >>/etc/hosts"

  - Pull the images.

        [root@kube-master-1 ~]# ansible k8snodes -m shell -a 'kubeadm config images pull --image-repository=cr-cn-beijing.ivolces.com/volc --kubernetes-version=1.19.8'

  - Join the worker nodes to the cluster.

        [root@kube-master-1 ~]# ansible k8snodes -m shell -a "kubeadm join apiserver:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:d6ea8cdf2777f087aca6001ca4eeeb5898e4159a3e6adeac41c3d72462a46002"
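A mistyped token or hash makes the join fail only after kubeadm has contacted the cluster, so a quick format check before running the command can save a round trip. The sketch below validates the two values and assembles the worker join command. `valid_token`, `valid_ca_hash`, and `build_join_cmd` are hypothetical helpers, and the check covers only the format, not whether the token is still valid on the cluster.

```shell
#!/bin/sh
# Return 0 if the value looks like a kubeadm bootstrap token:
# six characters, a dot, then sixteen characters, all from [a-z0-9].
valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Return 0 if the value looks like a CA certificate hash:
# the prefix "sha256:" followed by 64 lowercase hex digits.
valid_ca_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Print the worker join command, or fail if an argument is malformed.
build_join_cmd() {
    endpoint=$1
    token=$2
    ca_hash=$3
    valid_token "$token" || { echo "bad token" >&2; return 1; }
    valid_ca_hash "$ca_hash" || { echo "bad CA hash" >&2; return 1; }
    echo "kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash ${ca_hash}"
}
```

The token in kubeadm-init.yaml has a ttl of 24h0m0s. If it has expired, running `kubeadm token create --print-join-command` on a master node prints a fresh join command.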


Deployment verification

Verify the K8S cluster deployment result

- On kube-master-1, check the status of the deployed etcd cluster.

      [root@kube-master-1 ~]# kubectl -n kube-system exec etcd-kube-master-1 -- etcdctl \
          --endpoints=https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379 \
          --cacert=/etc/kubernetes/pki/etcd/ca.crt \
          --cert=/etc/kubernetes/pki/etcd/server.crt \
          --key=/etc/kubernetes/pki/etcd/server.key \
          endpoint status --write-out=table
      +---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
      |         ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
      +---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
      | https://192.168.1.11:2379 | aa869cb0f2e7ed31 |  3.4.13 |  3.0 MB |      true |      false |         4 |       5758 |               5758 |        |
      | https://192.168.1.12:2379 | dca639dd4f0c7455 |  3.4.13 |  2.9 MB |     false |      false |         4 |       5758 |               5758 |        |
      | https://192.168.1.13:2379 | 5e7a592e55ecd721 |  3.4.13 |  2.9 MB |     false |      false |         4 |       5758 |               5758 |        |
      +---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

- Check the status of the nodes in the cluster.

      [root@kube-master-1 ~]# kubectl get node -owide
      NAME            STATUS   ROLES    AGE   VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION          CONTAINER-RUNTIME
      kube-master-1   Ready    master   25m   v1.19.8   192.168.1.11   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://20.10.8
      kube-master-2   Ready    master   22m   v1.19.8   192.168.1.12   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://20.10.8
      kube-master-3   Ready    master   16m   v1.19.8   192.168.1.13   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://20.10.8
      kube-node-1     Ready    <none>   58s   v1.19.8   192.168.1.21   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://20.10.8
      kube-node-2     Ready    <none>   58s   v1.19.8   192.168.1.22   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://20.10.8
      kube-node-3     Ready    <none>   58s   v1.19.8   192.168.1.23   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://20.10.8

- Deploy an nginx application.

      [root@kube-master-1 ~]# kubectl create deployment nginx-deployment --image=nginx --replicas=3
      deployment.apps/nginx-deployment created
      [root@kube-master-1 ~]# kubectl get po -o wide
      NAME                                READY   STATUS    RESTARTS   AGE    IP         NODE          NOMINATED NODE   READINESS GATES
      nginx-deployment-84cd76b964-8klxh   1/1     Running   0          2m1s   10.3.4.2   kube-node-3   <none>           <none>
      nginx-deployment-84cd76b964-czt89   1/1     Running   0          2m1s   10.3.5.2   kube-node-2   <none>           <none>
      nginx-deployment-84cd76b964-lxbnx   1/1     Running   0          2m1s   10.3.3.2   kube-node-1   <none>           <none>
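Reading the STATUS column by eye works for six nodes, but a small filter makes the node check scriptable. The sketch below reads the output of `kubectl get nodes --no-headers` on standard input and prints the nodes that are not ready. `not_ready_nodes` is an illustrative name.

```shell
#!/bin/sh
# Read `kubectl get nodes --no-headers` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example (run on a master node):
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reports Ready. Note that a cordoned node shows a status such as Ready,SchedulingDisabled and is therefore also listed by this filter.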

Verify the availability of the K8S cluster

- Download the YAML file of the ingress controller, which is deployed as pods.

      wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.48.1/deploy/static/provider/cloud/deploy.yaml

- Modify the pod anti-affinity so that the ingress-controller pods are spread across multiple nodes, which keeps the service highly available.

      [root@kube-master-1 ~]# vi deploy.yaml
          spec:
            affinity:
              podAntiAffinity:
                requiredDuringSchedulingIgnoredDuringExecution:
                - labelSelector:
                    matchExpressions:
                    - key: app.kubernetes.io/name
                      operator: In
                      values:
                      - ingress-nginx
                  topologyKey: "kubernetes.io/hostname"
            tolerations:
            - key: node-role.kubernetes.io/master
              operator: Exists
              effect: NoSchedule
            dnsPolicy: ClusterFirst

- Change the image source to the Volcano Engine image registry.

            containers:
              - name: controller
                image: cr-cn-beijing.ivolces.com/volc/ingress-nginx:v0.48.1
                imagePullPolicy: IfNotPresent
                lifecycle:
                  preStop:
                    exec:
                      command:
                        - /wait-shutdown

- Create the YAML file for the ingress NodePort service.

      [root@i-3tld0tp3564e8i4rk28m ~]# vim service.yaml
      apiVersion: v1
      kind: Service
      metadata:
        annotations:
        labels:
          helm.sh/chart: ingress-nginx-3.34.0
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/instance: ingress-nginx
          app.kubernetes.io/version: 0.48.1
          app.kubernetes.io/managed-by: Helm
          app.kubernetes.io/component: controller
        name: ingress-nginx-controller
        namespace: ingress-nginx
      spec:
        type: NodePort
        ports:
          - name: http
            port: 80
            protocol: TCP
            targetPort: http
            nodePort: 30080
          - name: https
            port: 443
            protocol: TCP
            targetPort: https
            nodePort: 30443
        selector:
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/instance: ingress-nginx
          app.kubernetes.io/component: controller

- Apply the YAML files to create the ingress controller and its service.

      [root@kube-master-1 ~]# kubectl apply -f deploy.yaml
      [root@kube-master-1 ~]# kubectl apply -f service.yaml
      [root@kube-master-1 ~]# kubectl get pod -o wide -n ingress-nginx
      NAME                                       READY   STATUS      RESTARTS   AGE     IP         NODE                     NOMINATED NODE   READINESS GATES
      ingress-nginx-admission-create-k4tjt       0/1     Completed   0          47m     10.3.3.2   i-3tld0tp3564e8i4rk28p   <none>           <none>
      ingress-nginx-admission-patch-m72xl        0/1     Completed   0          47m     10.3.3.3   i-3tld0tp3564e8i4rk28p   <none>           <none>
      ingress-nginx-controller-f6b475f57-56lsm   1/1     Running     0          4m26s   10.3.0.8   i-3tld0tp3564e8i4rk28m   <none>           <none>
      ingress-nginx-controller-f6b475f57-kxtjr   1/1     Running     0          4m7s    10.3.2.3   i-3tld0tp3564e8i4rk28o   <none>           <none>
      ingress-nginx-controller-f6b475f57-vnjt4   1/1     Running     0          3m37s   10.3.1.3   i-3tld0tp3564e8i4rk28n   <none>           <none>
      [root@kube-master-1 ~]# kubectl get svc -n ingress-nginx
      NAME                                 TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)                      AGE
      ingress-nginx-controller             NodePort    10.2.113.37   <none>        80:30080/TCP,443:30443/TCP   54m
      ingress-nginx-controller-admission   ClusterIP   10.2.42.159   <none>        443/TCP                      54m

- Configure the Volcano Engine load balancer and the security group rules.

  - Log in to the load balancer console.

  - In the load balancer instance list, click the name of the created instance, k8s-clb, to open its details page.

  - On the "Backend server groups" tab, add a server group named k8s-ingress-servergroup.

  - On the "Listeners" tab, add a listener named k8s-ingress-listener.

  - On the "Backend server groups" tab, click a backend server name to open its details page, click the security group link to open the security group page, and open the required ports.
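The ports opened in the security group are the nodePort values from service.yaml (30080 and 30443). By default the apiserver accepts NodePort values only in the range 30000 to 32767, so a quick range check before editing the service or the security group can catch a bad port early. The sketch below assumes that default range. `in_nodeport_range` and the two `NODEPORT_*` variables are illustrative names.

```shell
#!/bin/sh
# Default NodePort range of kube-apiserver (--service-node-port-range).
NODEPORT_MIN=30000
NODEPORT_MAX=32767

# Return 0 if the port lies inside the default NodePort range.
in_nodeport_range() {
    [ "$1" -ge "$NODEPORT_MIN" ] && [ "$1" -le "$NODEPORT_MAX" ]
}

for port in 30080 30443; do
    if in_nodeport_range "$port"; then
        echo "port $port: within default NodePort range"
    else
        echo "port $port: outside default NodePort range"
    fi
done
```

If the cluster was started with a different `--service-node-port-range`, adjust the two variables to match.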

- Create the ingress rule.

  - Deploy the WordPress test application. Save each of the following manifests to a file and create the resources with kubectl apply -f followed by the file name.

    - Create MySQL.

          [root@kube-master-1 ~]# cat mysql-db.yaml
          apiVersion: v1
          kind: Service
          metadata:
            name: wordpress-mysql
            labels:
              app: wordpress
          spec:
            type: ClusterIP
            clusterIP: None
            ports:
              - port: 3306
            selector:
              app: wordpress
              tier: mysql
          ---
          apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
          kind: Deployment
          metadata:
            name: wordpress-mysql
            labels:
              app: wordpress
          spec:
            selector:
              matchLabels:
                app: wordpress
                tier: mysql
            template:
              metadata:
                labels:
                  app: wordpress
                  tier: mysql
              spec:
                containers:
                - image: mysql:5.6
                  name: mysql
                  env:
                  - name: MYSQL_ROOT_PASSWORD
                    value: "123456"
                  ports:
                  - containerPort: 3306
                    name: mysql

    - Create WordPress.

          [root@kube-master-1 ~]# cat wordpress.yaml
          apiVersion: v1
          kind: Service
          metadata:
            name: wordpress
            labels:
              app: wordpress
          spec:
            type: ClusterIP
            ports:
              - port: 80
            selector:
              app: wordpress
              tier: frontend
          ---
          apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
          kind: Deployment
          metadata:
            name: wordpress
            labels:
              app: wordpress
          spec:
            selector:
              matchLabels:
                app: wordpress
                tier: frontend
            template:
              metadata:
                labels:
                  app: wordpress
                  tier: frontend
              spec:
                containers:
                - image: cr-cn-beijing.ivolces.com/volc/wordpress:4.8-apache
                  name: wordpress
                  env:
                  - name: WORDPRESS_DB_HOST
                    value: wordpress-mysql
                  - name: WORDPRESS_DB_PASSWORD
                    value: "123456"
                  ports:
                  - containerPort: 80
                    name: wordpress


  - Create the ingress rule.

        [root@kube-master-1 ~]# cat ingress-wordpress-db.yaml
        apiVersion: networking.k8s.io/v1
        kind: Ingress
        metadata:
          name: wordpress-ingress
          annotations:
            nginx.ingress.kubernetes.io/rewrite-target: /
        spec:
          rules:
          - http:
              paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: wordpress
                    port:
                      number: 80

- Open http://<load balancer IP>/ in a browser. If the WordPress page appears, WordPress has been deployed successfully.


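Glossary

| Term | Description |
|---|---|
| Cloud server | Elastic Compute Service (ECS) is a simple, efficient computing service with elastically scalable processing capacity. ECS helps you build more stable and secure applications, improve operations efficiency, and reduce IT cost, so that you can focus on innovation in your core business. |
| Private network | A Virtual Private Cloud (VPC) is a custom private network that a user creates on Volcano Engine. Different private networks are logically isolated from each other at layer 2. Users can create and manage cloud product instances, such as ECS, load balancers, and RDS, inside the private networks they create. |
| Load balancer | CLB is a load balancing service that distributes traffic across multiple cloud servers. Distributing traffic extends the external service capacity of an application system, and eliminating single points of failure improves its availability. |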